NVIDIA’s latest technologies, particularly the Blackwell architecture and its rack-scale configurations, are at the forefront of AI supercomputing. The official TOP500 list ranks systems by the High-Performance Linpack (HPL) benchmark, a traditional FP64 HPC measure. The true “AI supercomputers”, by contrast, are often custom-built clusters optimized for deep learning, generative AI, and large language models.

Here are the top AI supercomputers and leading systems powered by NVIDIA’s latest technologies:

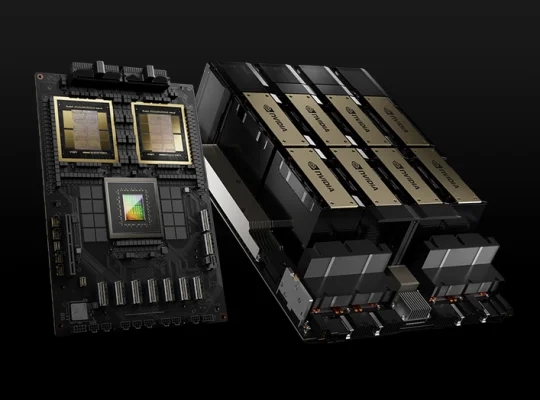

1. NVIDIA Blackwell-Powered AI Factories (GB200, GB300, Blackwell Ultra Systems):

These systems are the cutting edge, designed from the ground up for the most demanding AI workloads. NVIDIA’s Blackwell architecture is dominating the AI infrastructure market.

CoreWeave’s GB300 NVL72 Clusters: CoreWeave, a leading AI cloud service provider, is among the first to deploy systems built on NVIDIA’s GB300 NVL72 platform. These clusters utilize Blackwell Ultra Superchips (GB300), which feature:

- 72 Blackwell Ultra GPUs and 36 Arm-based 72-core Grace CPUs per rack.

- Each rack delivers 1.1 ExaFLOPS of dense FP4 inference and 0.36 ExaFLOPS of FP8 training performance. The FP4 figure is about 50% higher than that of the GB200 NVL72.

- Equipped with 20 TB of HBM3e and 40 TB of total RAM per rack.

- Use NVIDIA Quantum-X800 InfiniBand switches and ConnectX-8 SuperNICs for high-bandwidth scale-out networking.

- These systems are designed to power next-generation AI, particularly for LLMs and agentic AI.
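The per-rack figures above can be cross-checked with simple arithmetic. This sketch assumes 288 GB of HBM3e per Blackwell Ultra GPU, 480 GB of LPDDR5X per Grace CPU, and about 720 PFLOPS of dense FP4 for a GB200 NVL72 rack. None of these three numbers come from the text above, so treat the output as a rough consistency check, not a specification.

```python
# Back-of-envelope per-rack figures for a GB300 NVL72 rack.
# ASSUMPTIONS (not stated in the text): 288 GB HBM3e per GPU,
# 480 GB LPDDR5X per Grace CPU, 720 PFLOPS dense FP4 for GB200 NVL72.

GPUS_PER_RACK = 72
CPUS_PER_RACK = 36

HBM_PER_GPU_GB = 288           # assumption
LPDDR_PER_CPU_GB = 480         # assumption

RACK_FP4_DENSE_PFLOPS = 1100   # 1.1 EF, quoted above
RACK_FP8_DENSE_PFLOPS = 360    # 0.36 EF, quoted above
GB200_FP4_DENSE_PFLOPS = 720   # assumption, used only for the comparison


def rack_summary() -> dict:
    """Derive memory totals and per-GPU throughput from rack-level numbers."""
    hbm_tb = GPUS_PER_RACK * HBM_PER_GPU_GB / 1000
    lpddr_tb = CPUS_PER_RACK * LPDDR_PER_CPU_GB / 1000
    return {
        "hbm_tb": hbm_tb,
        "fast_memory_tb": hbm_tb + lpddr_tb,
        "fp4_pflops_per_gpu": RACK_FP4_DENSE_PFLOPS / GPUS_PER_RACK,
        "fp8_pflops_per_gpu": RACK_FP8_DENSE_PFLOPS / GPUS_PER_RACK,
        # Fractional FP4 gain over GB200 NVL72 (0.5 would mean +50%).
        "fp4_gain_vs_gb200": RACK_FP4_DENSE_PFLOPS / GB200_FP4_DENSE_PFLOPS - 1,
    }


if __name__ == "__main__":
    for key, value in rack_summary().items():
        print(f"{key}: {value:.2f}")
```

Under these assumptions the rack holds about 20.7 TB of HBM3e and about 38 TB of combined fast memory. That is close to the rounded 40 TB figure and matches the 38 TB quoted for DGX GB300. Each GPU contributes roughly 15.3 PFLOPS of dense FP4 and 5 PFLOPS of dense FP8, and the FP4 gain over GB200 NVL72 works out to about 53%.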

NVIDIA DGX SuperPODs with Blackwell Ultra: NVIDIA itself is building and offering DGX SuperPODs featuring Blackwell Ultra GPUs (B300). These are “out-of-the-box AI supercomputers” for enterprises to build their own AI factories.

- DGX GB300 systems: Built on Grace Blackwell Ultra Superchips (36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell Ultra GPUs) in a liquid-cooled, rack-scale architecture designed for real-time agent responses on advanced reasoning models. They offer up to 70x more AI performance for reasoning than Hopper-based systems, along with 38 TB of fast memory.

- DGX B300 systems: Air-cooled platforms using NVIDIA Blackwell Ultra GPUs, delivering up to 11x faster AI inference and 4x faster training than Hopper.

- These SuperPODs can scale to tens of thousands of Grace Blackwell Ultra Superchips, connected via NVIDIA NVLink, Quantum-X800 InfiniBand, and Spectrum-X Ethernet networking.

- Cloud giants like AWS, Microsoft Azure, and Google Cloud are integrating NVIDIA’s GB200 and GB300 systems into their global networks to power their AI offerings.

2. Major Hyperscale AI Clusters (Often NVIDIA H100/H200 based, transitioning to Blackwell):

While Blackwell is the future, many of the largest operational AI supercomputers in early-to-mid 2025 are still heavily reliant on the previous-generation NVIDIA Hopper H100 and H200 GPUs. These systems are constantly being expanded and upgraded.

- xAI’s Colossus (Memphis): Built to train xAI’s Grok models, this cluster is reported to be one of the largest in the world, with an estimated 200,000 NVIDIA H100 chip equivalents. At that scale it is reportedly capable of training a GPT-3-sized model in under two hours.

- Meta’s AI Infrastructure: Meta has invested heavily in NVIDIA GPUs, building extensive H100 clusters (reportedly reaching 100,000 H100 equivalents in some configurations) to train foundation models such as Llama.

- Microsoft/OpenAI (Goodyear Cluster): A substantial AI compute cluster, reportedly featuring 100,000 H100 equivalents, used for training and deploying advanced OpenAI models. Microsoft’s Azure cloud also heavily features NVIDIA H100 and H200 instances.

- Oracle’s H200-based Machine: Oracle Cloud Infrastructure (OCI) is building large-scale AI infrastructure, including systems powered by NVIDIA H200 GPUs.

- JUPITER (Germany): A major European supercomputer built on NVIDIA Grace Hopper (GH200) Superchips, each pairing a Grace CPU with a Hopper H100 GPU. With 23,536 GH200 Superchips, it is a powerful system for both HPC and AI. It is expected to place near the top of the TOP500 list, which ranks HPL performance rather than AI throughput.

- Eagle (Microsoft Azure): A large system with 14,400 NVIDIA H100 GPUs and an HPL (Rmax) score of about 561 PFLOPS, demonstrating strong general-purpose supercomputing capability alongside AI.

- Alps (Switzerland): Operated by the Swiss National Supercomputing Centre (CSCS), Alps uses 10,400 NVIDIA Grace Hopper (GH200) Superchips and focuses on scientific research and AI.

- ABCI-Q (Japan): Deployed by Japan’s National Institute of Advanced Industrial Science and Technology (AIST), ABCI-Q features 2,020 NVIDIA H100 GPUs. It is described as the world’s largest research supercomputer dedicated to quantum computing, with integrated AI supercomputing capabilities.

- NVIDIA Eos: NVIDIA’s internal AI supercomputer, built from 576 NVIDIA DGX H100 systems (4,608 H100 GPUs) connected by Quantum-2 InfiniBand and delivering 18.4 exaflops of FP8 AI performance. NVIDIA uses it for its own AI research and development.
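Cluster sizes like these translate into a compute budget with one multiplication, and into training time with one division. This sketch assumes about 989 TFLOPS of dense BF16 per H100 SXM and a sustained utilization of 40%. It also assumes roughly 3.14e23 FLOPs to train GPT-3 (175B) and about 4 PFLOPS of FP8 (with sparsity) per H100, a rounding of the roughly 3.96 PFLOPS datasheet figure. These are illustrative inputs, not measured values for either system.

```python
# Rough cluster-scale arithmetic for Eos and Colossus.
# ASSUMPTIONS: ~989 TFLOPS dense BF16 per H100 SXM, ~4 PFLOPS FP8 (sparse,
# rounded) per H100, ~3.14e23 FLOPs to train GPT-3 175B, 40% utilization.

H100_BF16_DENSE_FLOPS = 989e12   # assumption
H100_FP8_SPARSE_FLOPS = 4e15     # assumption (rounded)
GPT3_TRAINING_FLOPS = 3.14e23    # assumption


def aggregate_flops(num_gpus: int, flops_per_gpu: float) -> float:
    """Peak aggregate throughput of a cluster, in FLOP/s."""
    return num_gpus * flops_per_gpu


def hours_to_train(total_flops: float, num_gpus: int,
                   flops_per_gpu: float, utilization: float) -> float:
    """Wall-clock hours if the cluster sustains `utilization` of its peak."""
    sustained = aggregate_flops(num_gpus, flops_per_gpu) * utilization
    return total_flops / sustained / 3600


# Eos: 576 DGX H100 systems with 8 GPUs each.
eos_gpus = 576 * 8
eos_exaflops = aggregate_flops(eos_gpus, H100_FP8_SPARSE_FLOPS) / 1e18

# Colossus: ~200,000 H100 equivalents training a GPT-3-sized model.
colossus_hours = hours_to_train(GPT3_TRAINING_FLOPS, 200_000,
                                H100_BF16_DENSE_FLOPS, utilization=0.40)

print(f"Eos: {eos_gpus} GPUs, ~{eos_exaflops:.1f} EF FP8")
print(f"Colossus: GPT-3 in ~{colossus_hours:.1f} h at 40% utilization")
```

With these inputs, Eos comes out at 4,608 GPUs and about 18.4 EF, consistent with the quoted figure. Colossus would need about 1.1 hours for a GPT-3-sized run. The estimate is dominated by the utilization assumption: at 20% it roughly doubles to about 2.2 hours.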

3. Personal AI Supercomputers and Workstations (Blackwell-powered):

NVIDIA is also bringing Blackwell technology to more accessible form factors for individual developers and smaller teams.

- NVIDIA DGX Spark (formerly Project DIGITS): Billed as the “world’s smallest AI supercomputer,” it is built on the NVIDIA GB10 Grace Blackwell Superchip and delivers up to 1 petaflop of FP4 AI performance for prototyping and fine-tuning large AI models on a desktop.

- NVIDIA DGX Station with GB300: The first desktop system built with the NVIDIA GB300 Grace Blackwell Ultra Desktop Superchip, offering up to 784 GB of coherent memory and optimized for enterprise AI development.

- ASUS ExpertCenter Pro ET900N G3: One of the first desktop systems powered by NVIDIA’s GB300 Grace Blackwell Ultra “desktop superchip”, bringing server-rack level AI power to a workstation.
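For desktop systems, a practical question is which model sizes fit in memory. A weights-only estimate multiplies parameter count by bytes per parameter. This sketch assumes 128 GB of unified memory for DGX Spark and 784 GB of coherent memory for DGX Station. It also reserves a hypothetical 20% headroom for KV cache, activations, and runtime overhead. Fine-tuning needs considerably more memory for gradients and optimizer state, so treat the results as rough upper bounds for inference.

```python
# Weights-only memory estimate versus desktop memory capacity.
# ASSUMPTIONS: 128 GB (DGX Spark) and 784 GB (DGX Station, GB300) of memory;
# the 20% headroom is a hypothetical allowance for KV cache and overhead.

BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}
SYSTEM_MEMORY_GB = {"DGX Spark": 128, "DGX Station (GB300)": 784}  # assumption


def weights_gb(params_billion: float, precision: str) -> float:
    """GB needed for weights: 1e9 params * bytes/param / 1e9 bytes per GB."""
    return params_billion * BYTES_PER_PARAM[precision]


def max_params_billion(memory_gb: float, precision: str,
                       headroom: float = 0.8) -> float:
    """Largest model (billions of params) whose weights fit in the usable share."""
    return memory_gb * headroom / BYTES_PER_PARAM[precision]


for name, memory_gb in SYSTEM_MEMORY_GB.items():
    for precision in ("fp16", "fp8", "fp4"):
        size = max_params_billion(memory_gb, precision)
        print(f"{name}: ~{size:.0f}B params at {precision}")
```

Halving the bytes per parameter doubles the model size that fits, which is why low-precision formats such as FP4 matter as much for desktop prototyping as raw FLOPS do.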

The landscape of AI supercomputing is rapidly evolving, with NVIDIA’s Blackwell architecture quickly becoming the new benchmark for performance and efficiency in large-scale AI training and inference. Existing Hopper-based systems remain powerful, but new deployments are heavily favoring Blackwell.