As of mid-2025, NVIDIA continues to dominate the AI supercomputing landscape with its GPU architectures. The focus has shifted decisively to the Blackwell architecture, whose variants target both enterprise data centers and professional workstations.

Here are the best NVIDIA GPUs for AI supercomputing in 2025:

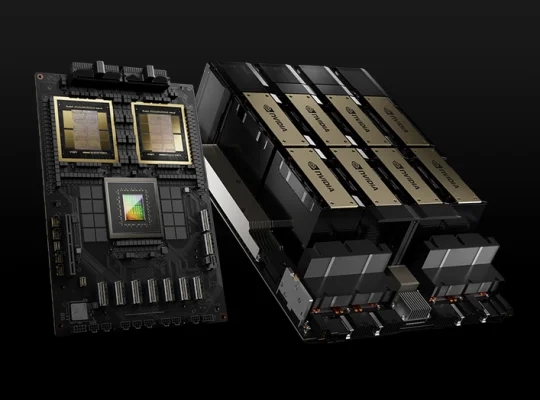

1. NVIDIA Blackwell GPUs (B100, B200, B300, GB200, Blackwell Ultra)

Blackwell is the top choice for large-scale AI training and inference. It succeeds Hopper (H100/H200) and delivers a large generational gain in performance and efficiency.

Key Innovations:

- GB200 Grace Blackwell Superchip: This is a cornerstone for AI supercomputing, integrating two Blackwell GPUs with the Grace CPU on a single module. This design dramatically reduces data transfer bottlenecks and increases overall system throughput.

- Blackwell Ultra (B300): This is the most powerful variant of the Blackwell architecture announced, offering significant performance increases over earlier Blackwell chips (like the B100/B200) and even more so over Hopper. It boasts up to 30 PFLOPS of FP4 Tensor Core performance.

- Fifth-generation Tensor Cores: Enhanced to support new FP4 and MXFP6 data types, doubling performance for next-gen AI models while maintaining accuracy through “micro-tensor scaling.”

- Second-generation Transformer Engine: Specifically optimized for the demanding computations of large language models (LLMs).

- Massive Memory Capacity and Bandwidth: Blackwell GPUs like the B200 feature up to 192GB of HBM3e memory with 8 TB/s of memory bandwidth, which is crucial for handling very large AI models and datasets. The B300 is expected to reach 288GB of HBM3e memory.

- NVLink and Interconnect: Blackwell systems leverage NVIDIA NVLink, NVIDIA Quantum-X800 InfiniBand, and NVIDIA Spectrum-X Ethernet networking to connect tens of thousands of GPUs, creating vast AI factories capable of training trillion-parameter models.

- Dedicated Decompression Engine: Accelerates data loading and analytics, speeding up end-to-end data pipelines.

- Reliability, Availability, Serviceability (RAS) Engine: Utilizes AI-powered predictive analytics to monitor hardware and diagnose faults early, maximizing uptime for large-scale deployments.

- Secure AI and Confidential Computing: First GPUs with TEE-I/O support for end-to-end encryption of data in use, critical for privacy-sensitive industries.
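The “micro-tensor scaling” idea mentioned for the fifth-generation Tensor Cores can be illustrated with a small sketch. This is not NVIDIA's hardware implementation; it is a simplified illustration that assumes a block-scaled scheme in the style of the OCP Microscaling (MX) formats, where each block of 32 values shares one power-of-two scale and each value is stored as a 4-bit E2M1 float. The function names and the rounding rule are hypothetical choices made for the example.

```python
import math

# Magnitudes representable by a 4-bit E2M1 float (the sign is stored separately).
FP4_E2M1_VALUES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
E2M1_MAX_EXPONENT = 2  # the largest magnitude is 6.0 = 1.5 * 2**2


def quantize_block(block):
    """Return (scale, codes): one shared power-of-two scale plus FP4 codes."""
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return 1.0, [0.0] * len(block)
    # Pick the scale so the largest element lands near the top of the FP4 range.
    shared_exponent = math.floor(math.log2(max_abs)) - E2M1_MAX_EXPONENT
    scale = 2.0 ** shared_exponent
    codes = []
    for x in block:
        # Saturate at the largest FP4 magnitude, then round to the nearest code.
        magnitude = min(abs(x) / scale, FP4_E2M1_VALUES[-1])
        nearest = min(FP4_E2M1_VALUES, key=lambda v: abs(v - magnitude))
        codes.append(math.copysign(nearest, x))
    return scale, codes


def quantize(values, block_size=32):
    """Quantize block by block and return the dequantized approximation."""
    approx = []
    for start in range(0, len(values), block_size):
        scale, codes = quantize_block(values[start:start + block_size])
        approx.extend(code * scale for code in codes)
    return approx


if __name__ == "__main__":
    # Small and large values sit in different blocks, so each gets its own scale.
    values = [0.001] * 32 + [100.0] * 32
    per_block = quantize(values, block_size=32)
    one_scale = quantize(values, block_size=64)
    print("per-block scale :", per_block[0], per_block[32])
    print("single scale    :", one_scale[0], one_scale[32])
```

With one scale per 32-value block, the small values survive quantization; with a single scale across all 64 values, they round to zero. That is the intuition behind keeping accuracy at very low bit widths.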

Use Cases: Pre-training and fine-tuning of next-generation LLMs (e.g., Llama 3.1 405B), real-time inference for agentic AI, large-scale vision transformers, scientific simulations, and general high-performance computing (HPC) tasks.
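To see why memory capacity and low-precision formats matter for a model such as Llama 3.1 405B, a rough back-of-the-envelope estimate helps. The sketch below counts weight storage only, under stated assumptions: decimal gigabytes, no KV cache, no activations, no optimizer state, and no overhead for scale factors. The helper names are hypothetical, and real deployments need considerably more headroom.

```python
import math

# Bytes used to store one parameter at each precision (weights only).
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def weight_gb(num_params, precision):
    """Weight storage in decimal GB for a model with num_params parameters."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def gpus_needed(num_params, precision, gpu_memory_gb):
    """Minimum GPU count whose combined memory holds the weights alone."""
    return math.ceil(weight_gb(num_params, precision) / gpu_memory_gb)


if __name__ == "__main__":
    params = 405e9  # Llama 3.1 405B
    # Capacities quoted in the article for the B200 and the B300.
    for label, capacity_gb in (("B200, 192GB", 192.0), ("B300, 288GB", 288.0)):
        for precision in ("FP16", "FP8", "FP4"):
            size = weight_gb(params, precision)
            count = gpus_needed(params, precision, capacity_gb)
            print(f"{label:12s} {precision}: {size:6.1f} GB of weights, at least {count} GPU(s)")
```

Under these assumptions, halving the bytes per parameter halves the weight footprint, which is why FP4 support and larger HBM3e capacity together reduce the number of GPUs a single model replica has to span.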

Availability: Blackwell-powered systems like the GB200 NVL72 and DGX SuperPODs built with Blackwell Ultra GPUs are central to NVIDIA’s enterprise AI offerings in 2025.

2. NVIDIA Hopper GPUs (H200, H100 SXM/PCIe)

While Blackwell is the new king, Hopper GPUs remain highly capable and widely deployed for AI supercomputing. They offer a balance of high performance and established ecosystem support.

NVIDIA H200: This is the immediate predecessor to Blackwell, featuring 141GB of HBM3e memory, offering a significant boost in memory capacity and bandwidth over the H100. It’s excellent for running large models that might strain the H100’s memory limits.

NVIDIA H100 (SXM/PCIe): The H100, particularly the SXM variant with its higher NVLink bandwidth, is a powerhouse for AI model training with billions of parameters. The PCIe version offers more flexibility for integrating into existing server infrastructures.
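The practical effect of the H200's extra capacity and bandwidth can be sketched with simple arithmetic. The example below checks whether a model's weights fit on one GPU and computes a crude upper bound on single-stream decoding speed, assuming every generated token requires reading all weights from memory once. The 141GB figure comes from the text above; the H100 SXM capacity (80GB) and both bandwidth figures (3.35 TB/s and 4.8 TB/s) are assumptions taken from NVIDIA's public spec sheets, and the 70B-parameter FP8 model is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    memory_gb: float       # HBM capacity in decimal GB
    bandwidth_tb_s: float  # peak memory bandwidth in TB/s


# Assumed spec-sheet figures; only the H200 capacity appears in the article.
H100_SXM = GpuSpec("H100 SXM", 80.0, 3.35)
H200 = GpuSpec("H200", 141.0, 4.8)


def weights_fit(gpu, weight_gb):
    """True if the weights alone fit in GPU memory (ignores KV cache, activations)."""
    return weight_gb <= gpu.memory_gb


def decode_tokens_per_s_bound(gpu, weight_gb):
    """Bandwidth-bound ceiling on tokens/s when each token reads all weights once."""
    return gpu.bandwidth_tb_s * 1e12 / (weight_gb * 1e9)


if __name__ == "__main__":
    weight_gb = 70.0  # hypothetical 70B-parameter model stored at FP8
    for gpu in (H100_SXM, H200):
        fits = weights_fit(gpu, weight_gb)
        bound = decode_tokens_per_s_bound(gpu, weight_gb)
        print(f"{gpu.name}: fits={fits}, at most {bound:.1f} tokens/s per stream")
```

This is a ceiling, not a prediction: batching, KV-cache traffic, and kernel efficiency all move real throughput away from it. It does show why memory bandwidth, not only capacity, drives inference performance on large models.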

Use Cases: Training large transformer models (e.g., GPT-4-class models, Llama 3.1/3.3), fine-tuning domain-specific models, vision-language training, and distributed training of diffusion models.

Considerations: Hopper is still excellent, but new deployments will likely opt for Blackwell because of its superior performance per watt and longer useful life. However, H100s may be more readily available or offer better cost efficiency on the secondary market.

3. NVIDIA RTX PRO Blackwell Series (e.g., RTX PRO 6000 Blackwell Workstation Edition)

While not designed for massive data center supercomputing clusters, the RTX PRO Blackwell series is crucial for individual researchers, developers, and smaller AI labs needing powerful local AI compute.

Key Features: These professional workstation GPUs benefit from the Blackwell architecture’s advancements in Tensor Cores, memory capacity (e.g., 96GB of GPU memory on the RTX PRO 6000 Blackwell), and improved efficiency.

Use Cases: Prototyping, fine-tuning large language models locally, high-fidelity AI-powered rendering and visualization, and accelerating professional AI, graphics, and simulation workloads on a workstation.

Project DIGITS / DGX Spark: NVIDIA has also announced “personal AI supercomputers” such as the DGX Spark (first shown as Project DIGITS), a desktop unit powered by the GB10 Grace Blackwell Superchip and designed for local LLM inference and fine-tuning.

Looking Ahead:

NVIDIA has already previewed the architecture that follows Blackwell. Vera Rubin is expected to feature HBM4 memory and deliver even greater performance; the figures cited so far are roughly 3.3x the performance of Blackwell Ultra and about 50 PFLOPS of dense FP4 compute. If those numbers hold, they would further solidify NVIDIA’s leadership in AI supercomputing for years to come.

When choosing the “best” GPU for AI supercomputing in 2025, the NVIDIA Blackwell architecture (specifically the GB200 and Blackwell Ultra variants) is the clear frontrunner for top-tier, large-scale deployments due to its unparalleled performance, memory, and scalability features. For more accessible or workstation-level AI supercomputing, the RTX PRO Blackwell series offers formidable capabilities.