Nvidia has long been the dominant force in the AI chip market, largely due to its powerful GPUs and the established CUDA software ecosystem. However, competitors like AMD and Intel are making significant strides, offering compelling alternatives with unique strengths.

Here’s a comparison of Nvidia AI chip performance against its key competitors:

Nvidia: The Established Leader

Key Strengths:

Dominant Market Share: Nvidia holds a significant majority of the AI chip market, especially for training large AI models.

CUDA Ecosystem: Nvidia’s CUDA platform provides a mature, comprehensive, and widely adopted software ecosystem that simplifies AI development and deployment. This “software moat” is a major competitive advantage.

Performance Leadership (H100/H200, Blackwell):

Nvidia H100: Remains a benchmark for AI training and inference, offering high performance in various precision types (FP8, FP16, TF32).

Nvidia H200: An upgraded version of the H100 with significantly more HBM3e memory (141GB) and higher memory bandwidth (4.8TB/s), making it particularly strong for large language model (LLM) inference and memory-bound workloads.

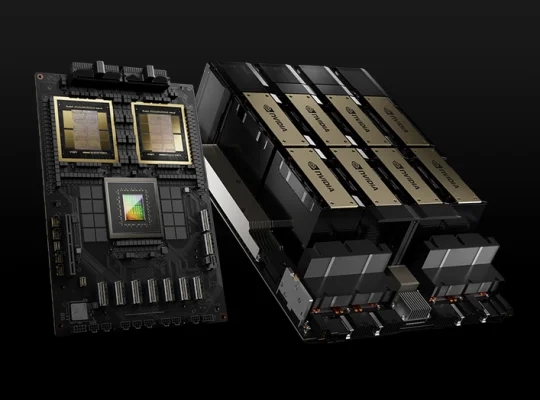

Nvidia Blackwell (B100/B200): Nvidia’s latest generation, the Blackwell architecture, promises substantial performance gains over Hopper (H100/H200). The B200 is expected to deliver even higher compute and memory capabilities, further solidifying Nvidia’s lead.

Integrated Solutions: Nvidia offers full-stack solutions, including GPUs, interconnect technologies (like NVLink and InfiniBand), and software, providing a highly optimized environment for AI workloads.

Considerations:

High Cost: Nvidia’s chips are typically the most expensive option, which can be a barrier for some organizations.

Proprietary Nature: While CUDA is powerful, its proprietary nature can lead to vendor lock-in.

AMD: The Rising Challenger

Key Strengths:

Competitive Hardware (MI300X, MI350 Series):

AMD Instinct MI300X: Designed for generative AI and HPC, it features 192GB of HBM3 memory and 5.3 TB/s of memory bandwidth, compared with 80GB and roughly 3.35 TB/s on Nvidia’s H100 (SXM). This makes it particularly strong for memory-intensive LLM inference and training, where it can outperform the H100 when the workload is limited by memory capacity or bandwidth rather than compute.

AMD Instinct MI350 Series: AMD’s latest, aiming to directly compete with Nvidia’s Blackwell series. Early reports suggest its performance is on par with Nvidia’s current Blackwell chips, offering significant gains over previous generations.

Strong Memory and Bandwidth: AMD often highlights its chips’ superior memory capacity and bandwidth, which are crucial for large AI models.

Open Compute Project (OCP) Compliance: AMD’s approach to OCP-compliant server designs can offer greater flexibility and potentially lower overall system costs compared to Nvidia’s more proprietary HGX systems.

ROCm Ecosystem: AMD’s ROCm software platform is gaining traction as an open-source alternative to CUDA, though it still has a way to go to match CUDA’s maturity and widespread adoption.

Considerations:

Software Maturity: While ROCm is improving, it’s generally considered less mature and user-friendly than CUDA, requiring more “elbow grease” for optimal performance.

Training Performance: While strong in inference, AMD’s training performance, particularly in large-scale distributed training, has historically lagged behind Nvidia due to software optimization and interconnect capabilities (though this is rapidly improving).
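The memory-capacity advantage described above can be made concrete with some back-of-the-envelope arithmetic. The sketch below is only an illustration: it uses the HBM capacities quoted in this article, while the 70-billion-parameter model size, the 20% headroom reserved for KV cache and activations, and the function names are assumptions chosen for the example rather than vendor figures.

```python
# Rough check: do a model's weights fit in a single accelerator's HBM?
# Capacities are the figures quoted in this article; the model size and
# the headroom fraction are illustrative assumptions.

BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "BF16": 2, "FP8": 1}

HBM_GB = {"Nvidia H200": 141, "AMD MI300X": 192}


def weights_gb(params_billion, precision):
    """Approximate weight footprint in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion, precision, hbm_gb, headroom=0.2):
    """True if the weights fit after reserving `headroom` of HBM
    for KV cache and activations (an assumed fraction)."""
    return weights_gb(params_billion, precision) <= hbm_gb * (1 - headroom)


if __name__ == "__main__":
    for chip, capacity in HBM_GB.items():
        for precision in ("FP16", "FP8"):
            verdict = "fits" if fits(70, precision, capacity) else "does not fit"
            print(f"70B @ {precision} on {chip} ({capacity} GB): {verdict}")
```

Under these assumptions, a 70B model in FP16 needs about 140GB for weights alone, which leaves essentially no headroom on a 141GB part but fits on a 192GB part. The same model in FP8 (about 70GB) fits on both.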

Intel: The Determined Entrant

Key Strengths:

Gaudi Series (Gaudi 2, Gaudi 3):

Intel Gaudi 3: According to Intel, it delivers twice the FP8 and four times the BF16 AI compute of Gaudi 2. It has 128GB of HBM2e memory and 3.7 TB/s of memory bandwidth.

Price-Performance: Intel often positions Gaudi as a cost-effective alternative, claiming competitive performance with Nvidia’s H100, especially in certain inference workloads, with a better price-to-performance ratio.

Open Standards: Intel emphasizes support for open standards, aiming to provide more flexibility and avoid vendor lock-in.

Enterprise Focus: Gaudi chips are designed for demanding enterprise AI workloads, particularly for inference tasks that require high parallel processing and memory bandwidth, such as LLM text generation and image generation.

Considerations:

Later Market Entry: Intel is a relatively newer entrant to the dedicated AI chip market compared to Nvidia and AMD.

Market Share: Intel currently holds a smaller market share in the high-performance AI accelerator space.

Software Ecosystem: While improving, Intel’s AI software stack is still developing compared to the established players.

Other Notable Players (Proprietary Chips)

Amazon (Trainium, Inferentia): Developed for its AWS cloud ecosystem, Trainium excels in cloud-native machine learning training, offering cost-effective scaling. Inferentia is optimized for inference.

Google (TPU): Google’s Tensor Processing Units (TPUs) are custom-designed for AI workloads and are primarily used within Google’s own cloud infrastructure and services.

Cerebras: Focuses on wafer-scale chips for massive AI models, offering unique advantages in parallelism.

Key Performance Metrics for Comparison

When evaluating AI chip performance, consider these crucial metrics:

FLOPS (Floating Point Operations Per Second): Measures raw computational power in various precision types (FP32, FP16, BF16, FP8).

Memory Capacity (HBM): Crucial for accommodating larger AI models and datasets, reducing the need to swap data to slower memory.

Memory Bandwidth: Determines how quickly data can be moved to and from the chip’s memory, impacting overall throughput.

Interconnect Bandwidth: How fast chips can communicate with each other in multi-GPU systems, vital for distributed training.

Time-to-Train: How quickly a model can be trained on a given dataset.

Inference Throughput & Latency: For deployment, how many inferences can be processed per second and how long each inference takes.

Power Efficiency (Performance-per-Watt): Important for operational costs and data center infrastructure.

Software Ecosystem & Tools: The maturity, ease of use, and breadth of libraries, frameworks, and developer tools.
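To show how these metrics interact, here is a minimal sketch of two common rules of thumb. It is not a benchmark. The first function estimates an upper bound on single-stream LLM decode speed by assuming every generated token requires reading all model weights from HBM once, ignoring KV-cache traffic and other overheads. The second is a simple roofline estimate. The bandwidth values are the ones quoted in this article; the 70GB weight footprint and all function names are assumptions made for the example.

```python
# Two rule-of-thumb estimates built from the metrics above.
# Bandwidths are the figures quoted in this article; everything else
# (model size, function names) is an illustrative assumption.

BANDWIDTH_TBPS = {"Nvidia H200": 4.8, "AMD MI300X": 5.3, "Intel Gaudi 3": 3.7}


def decode_tokens_per_s_bound(bandwidth_tbps, weights_gb):
    """Upper bound on batch-size-1 decode speed, assuming each token
    reads all weights from HBM once (KV cache and overheads ignored)."""
    return bandwidth_tbps * 1000.0 / weights_gb


def roofline_tflops(peak_tflops, bandwidth_tbps, flops_per_byte):
    """Attainable TFLOPS: the lower of the compute peak and
    memory bandwidth times arithmetic intensity (FLOPs per byte)."""
    return min(peak_tflops, bandwidth_tbps * flops_per_byte)


if __name__ == "__main__":
    weights_gb = 70  # e.g. a 70B-parameter model stored in FP8 (assumed)
    for chip, bw in BANDWIDTH_TBPS.items():
        bound = decode_tokens_per_s_bound(bw, weights_gb)
        print(f"{chip}: at most ~{bound:.0f} tokens/s per stream")
```

The point of the roofline function is that a kernel with low arithmetic intensity is capped by memory bandwidth no matter how high the chip's peak FLOPS is, which is why memory bandwidth and raw FLOPS are listed as separate metrics.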

Conclusion

In the rapidly evolving landscape of Artificial Intelligence, the battle for chip supremacy is intensifying. While Nvidia has long held the crown with its powerful GPUs and the ubiquitous CUDA ecosystem, formidable challengers like AMD and Intel are rapidly innovating, offering compelling alternatives tailored for diverse AI workloads.

Nvidia’s H100 and the Blackwell series continue to set benchmarks for raw computational power, especially for large-scale AI model training. The robust CUDA software platform remains a significant advantage, simplifying development and deployment for a vast community of AI researchers and practitioners.

However, AMD’s Instinct MI300X and the new MI350 series are proving to be strong contenders, particularly excelling in memory-intensive generative AI inference due to their ample HBM memory and high bandwidth. AMD’s commitment to open standards and its growing ROCm ecosystem offer a viable, and often more cost-effective, alternative.

Intel’s Gaudi series, with the latest Gaudi 3, is making its mark by focusing on strong price-performance for enterprise AI inference and training, emphasizing open solutions and robust networking capabilities.

Choosing the right AI chip depends heavily on your specific workload, budget, and ecosystem preferences. While Nvidia still commands a significant lead in market share and software maturity, the innovation from AMD and Intel is fostering a more competitive and diverse AI hardware landscape, providing more choice and specialized solutions for the future of AI.