NVIDIA’s Blackwell GPU architecture represents a monumental leap over its predecessor, Hopper, in virtually every aspect of AI and high-performance computing. While Hopper (H100/H200) was a groundbreaking architecture that propelled the early generative AI boom, Blackwell is purpose-built to tackle the next wave of trillion-parameter models and AI factories.

Performance Comparison (AI Focus)

Blackwell delivers unprecedented performance, particularly for generative AI workloads:

1. AI Training Performance

Hopper: Offered significant speedups over Ampere, driving the initial phase of large language model training.

Blackwell: NVIDIA cites roughly 3-4x faster LLM training than H100, with the largest gains measured at rack scale. Per GPU, the improvement is smaller but still substantial: in MLPerf Training benchmarks, Blackwell has shown 2x the performance of H100 for GPT-3 pre-training and 2.2x for Llama 2 70B fine-tuning. The enhanced Transformer Engine and higher memory bandwidth are key contributors.

2. AI Inference Performance

Hopper: A powerhouse for inference, especially with FP8 precision.

Blackwell: This is where Blackwell truly shines. NVIDIA reports up to 15x faster LLM inference than H100, driven largely by FP4 precision support in the second-generation Transformer Engine. For highly resource-intensive models such as 1.8-trillion-parameter Mixture-of-Experts (MoE) models, a rack-scale GB200 NVL72 system can deliver up to a 30x speedup in real-time inference throughput. The ability to run at lower precision (FP4) while largely preserving accuracy fundamentally changes the economics and feasibility of deploying massive AI models.
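To make the FP4 point concrete, here is a minimal sketch that simulates block-scaled 4-bit floating-point quantization in plain Python. It assumes the E2M1 layout (1 sign, 2 exponent, 1 mantissa bit), whose only non-negative values are 0, 0.5, 1, 1.5, 2, 3, 4 and 6, and gives each block of values its own scale factor so that small and large weights can share that tiny grid. The function names, the 16-value default block size and the full-precision float scale are illustrative assumptions; hardware formats such as NVFP4 store the scale in a compact 8-bit format and are not reproduced exactly here.

```python
# Hypothetical illustration of block-scaled FP4 (E2M1) quantization.
# Simplifications: the per-block scale is a plain Python float, and
# rounding is simple nearest-value (ties go to the smaller magnitude).

# The 8 non-negative magnitudes representable in E2M1.
E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = 6.0


def quantize_block(values):
    """Return (scale, codes); each code is a signed E2M1 magnitude."""
    amax = max(abs(v) for v in values)
    # Map the largest magnitude in the block onto the largest FP4 value.
    scale = amax / E2M1_MAX if amax > 0 else 1.0
    codes = []
    for v in values:
        scaled = abs(v) / scale
        # Round to the nearest representable FP4 magnitude.
        nearest = min(E2M1_MAGNITUDES, key=lambda m: abs(m - scaled))
        codes.append(nearest if v >= 0 else -nearest)
    return scale, codes


def dequantize_block(scale, codes):
    """Reconstruct approximate values from a scale and FP4 codes."""
    return [c * scale for c in codes]


def quantize(values, block_size=16):
    """Quantize a flat list block by block and return the dequantized approximation."""
    out = []
    for i in range(0, len(values), block_size):
        scale, codes = quantize_block(values[i:i + block_size])
        out.extend(dequantize_block(scale, codes))
    return out
```

For example, `quantize([6.0, -3.0, 0.5, 0.0], block_size=4)` returns the input unchanged, because every value already sits on the E2M1 grid with a scale of 1.0; arbitrary weights come back rounded to the nearest grid point times their block's scale, so the per-block scale is what keeps 4-bit storage usable.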

3. Memory Subsystem

Hopper (H100/H200):

H100: 80 GB of HBM3 memory with 3.35 TB/s bandwidth.

H200: 141 GB of HBM3e memory with ~4.8 TB/s bandwidth.

Blackwell (B200): Features a massive 192 GB of HBM3e memory with an astounding 8 TB/s of bandwidth. This larger capacity and higher bandwidth are critical for handling ever-growing AI models and datasets, reducing memory bottlenecks and allowing a single GPU to hold models that previously had to be split across several GPUs.
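The capacity and bandwidth figures above translate into two back-of-envelope numbers: how many parameters fit in HBM for weights alone, and an idealized ceiling on single-stream decode speed when each generated token must read every weight from memory once. This sketch assumes weights dominate memory traffic (KV cache, activations and batching are ignored), treats 1 GB as 10^9 bytes, and uses a hypothetical 70B-parameter model; real throughput is lower and depends heavily on the software stack.

```python
# Back-of-envelope memory arithmetic (assumptions: weights only, 1 GB = 1e9 bytes,
# batch size 1, every token streams all weights from HBM exactly once).

GPUS = {
    # name: (HBM capacity in GB, HBM bandwidth in TB/s), figures from the text
    "H100": (80, 3.35),
    "H200": (141, 4.8),
    "B200": (192, 8.0),
}

BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def max_params_billions(capacity_gb, precision):
    """Largest parameter count (in billions) whose weights alone fill the memory."""
    return capacity_gb / BYTES_PER_PARAM[precision]


def decode_tokens_per_s_upper_bound(bandwidth_tbs, params_billions, precision):
    """Idealized tokens/s if each token reads every weight once from HBM."""
    weight_bytes = params_billions * 1e9 * BYTES_PER_PARAM[precision]
    return bandwidth_tbs * 1e12 / weight_bytes


if __name__ == "__main__":
    for name, (cap, bw) in GPUS.items():
        # 70B is a hypothetical example model size.
        print(f"{name}: ~{max_params_billions(cap, 'FP8'):.0f}B params at FP8, "
              f"~{max_params_billions(cap, 'FP4'):.0f}B at FP4; "
              f"~{decode_tokens_per_s_upper_bound(bw, 70, 'FP8'):.0f} tok/s ceiling for 70B FP8")
```

Under these assumptions, a B200's 192 GB holds the weights of up to about 384B parameters at FP4, versus about 80B at FP8 on an 80 GB H100, and the bandwidth ceiling for the hypothetical 70B FP8 model rises from roughly 48 to roughly 114 tokens/s.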

4. NVLink Scalability

Hopper (NVLink-4): Offered 900 GB/s bidirectional throughput per GPU, allowing scaling to clusters of up to 256 GPUs with NVLink Network.

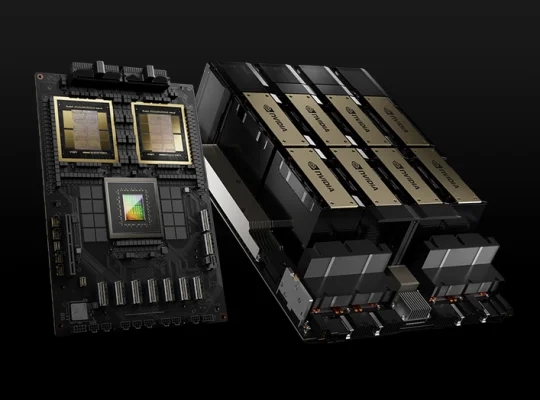

Blackwell (NVLink-5 and NVLink Switch): Doubles per-GPU interconnect bandwidth to 1.8 TB/s. More importantly, the new NVLink Switch technology extends a single NVLink domain to as many as 576 GPUs; the GB200 NVL72 system, for example, connects 72 GPUs that operate as a single, coherent accelerator. This sharply reduces communication bottlenecks and is essential for training and deploying the largest frontier AI models.
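One way to see why doubling NVLink bandwidth matters is to estimate the time of an idealized ring all-reduce, a collective commonly used to sum gradients across GPUs. In a ring, each GPU sends and receives about 2(N-1)/N times the message size, so the time scales inversely with per-direction bandwidth. This sketch assumes the per-GPU figures in the text are bidirectional (and halves them), ignores latency and protocol overhead, does not model in-switch reduction, and uses a hypothetical 140 GB gradient buffer spread across 72 GPUs.

```python
# Idealized ring all-reduce: each GPU sends (and receives) 2 * (N - 1) / N * S bytes,
# so time ~ that traffic divided by per-direction bandwidth per GPU.
# Latency, protocol overhead, and in-switch reduction are deliberately ignored.


def ring_allreduce_seconds(message_bytes, num_gpus, per_direction_bytes_per_s):
    """Bandwidth-only lower bound on ring all-reduce time."""
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    traffic = 2 * (num_gpus - 1) / num_gpus * message_bytes
    return traffic / per_direction_bytes_per_s


# Per-GPU figures from the text are bidirectional; halve them for one direction.
NVLINK4_PER_DIRECTION = 900e9 / 2   # Hopper
NVLINK5_PER_DIRECTION = 1.8e12 / 2  # Blackwell

if __name__ == "__main__":
    grad_bytes = 140e9  # hypothetical: 70B BF16 gradients (2 bytes each)
    for name, bw in (("NVLink 4 (Hopper)", NVLINK4_PER_DIRECTION),
                     ("NVLink 5 (Blackwell)", NVLINK5_PER_DIRECTION)):
        t = ring_allreduce_seconds(grad_bytes, 72, bw)
        print(f"{name}: ~{t * 1e3:.0f} ms per all-reduce across 72 GPUs")
```

Because the model is purely bandwidth-bound, halving the link time is exactly what doubling per-GPU bandwidth buys; in practice, latency and overlap with computation change the absolute numbers but not the direction of the gain.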

Power and Efficiency

Hopper: The H100 (SXM) has a maximum thermal design power (TDP) of up to 700W.

Blackwell: The B200 GPU has a higher TDP, often configured at 1000W (or higher in Grace Blackwell configurations).

Efficiency Gain: Despite the higher absolute power consumption per GPU, Blackwell offers significantly greater energy efficiency per inference or training operation. NVIDIA claims up to 25x greater energy efficiency for certain complex generative AI models when comparing full rack systems (e.g., GB200 NVL72 vs. HGX H100). This translates to a dramatic reduction in the total cost of ownership (TCO) and a lower environmental footprint for large-scale AI deployments.
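The efficiency argument reduces to simple arithmetic: energy per unit of work is power divided by throughput, so a GPU that draws more power can still use less energy per token as long as its throughput grows faster than its power draw. This sketch normalizes H100 throughput to 1.0 and uses the 700 W and 1000 W figures from this section; the speedup values are illustrative inputs rather than measurements, and the whole-system effects behind NVIDIA's rack-level 25x claim (networking, cooling, CPUs) are not modeled.

```python
# Illustrative arithmetic: energy per unit of work = power / throughput.
# Throughput is normalized so the H100 baseline equals 1.0.

H100_POWER_W = 700.0   # per-GPU power figure from the text
B200_POWER_W = 1000.0  # per-GPU power figure from the text


def energy_per_unit_work(power_watts, relative_throughput):
    """Energy per normalized unit of work (watts divided by relative throughput)."""
    return power_watts / relative_throughput


def efficiency_gain(base_power, base_tput, new_power, new_tput):
    """Factor by which the new GPU uses less energy per unit of work."""
    return energy_per_unit_work(base_power, base_tput) / energy_per_unit_work(new_power, new_tput)


def breakeven_speedup(base_power, new_power):
    """Speedup at which the higher-power GPU matches the baseline's energy per unit of work."""
    return new_power / base_power


if __name__ == "__main__":
    print(f"break-even speedup: {breakeven_speedup(H100_POWER_W, B200_POWER_W):.2f}x")
    for speedup in (2.0, 4.0, 15.0):  # hypothetical inputs, not measurements
        gain = efficiency_gain(H100_POWER_W, 1.0, B200_POWER_W, speedup)
        print(f"{speedup:>4.0f}x faster -> {gain:.1f}x less energy per unit of work")
```

With these inputs, the higher-power GPU breaks even on energy per unit of work at a speedup of about 1.43x (1000/700); plugging in the 15x inference figure quoted earlier gives 10.5x less energy per unit of work.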

Why Blackwell is a “Quantum Leap”

While Hopper was a fantastic architecture, Blackwell addresses its emerging limitations for the most demanding AI workloads:

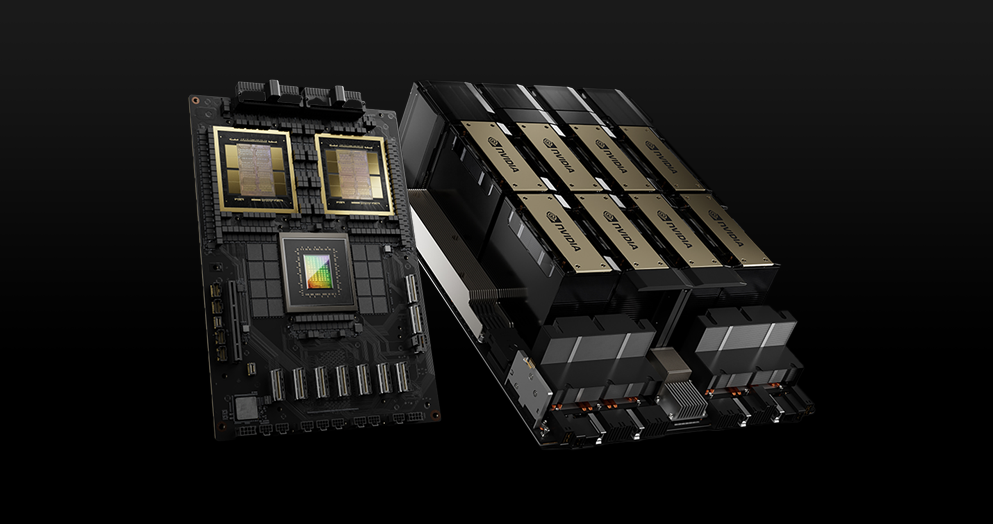

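1. Scaling Beyond Reticle Limits: Blackwell combines two reticle-limited dies, joined by a 10 TB/s chip-to-chip link, into a single GPU with 208 billion transistors. This dual-die design is a clever engineering solution for continuing to increase transistor count and performance once single-die designs hit physical manufacturing limits.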

2. Trillion-Parameter Models: Blackwell's much larger memory, higher bandwidth, and NVLink scalability are explicitly designed to handle models with trillions of parameters, which are increasingly common at the frontier of LLM development.

3. Real-Time Generative AI: FP4 support and the resulting inference speedups make real-time, highly interactive generative AI applications, such as sophisticated chatbots, code generation, and content creation, economically feasible.

4. Data Processing Bottlenecks: Blackwell's dedicated Decompression Engine directly addresses a growing bottleneck in AI pipelines by accelerating data loading and preparation.

In conclusion, while NVIDIA Hopper GPUs remain powerful and widely deployed, Blackwell represents a fundamental architectural shift. It’s not merely an incremental upgrade but a re-engineering of the GPU to meet and anticipate the unprecedented demands of the AI era, delivering performance and scalability that were previously unimaginable.