Nvidia: Revolutionizing AI with Supercomputing Power – The Dawn of the AI Factory

Nvidia stands at the forefront of the Artificial Intelligence revolution, not merely by providing powerful chips, but by designing an entire supercomputing platform that is changing how AI is developed, deployed, and scaled. We are moving from a world of isolated AI experiments to an era of AI factories, and Nvidia is building the infrastructure that makes this possible.

The Foundation: Accelerated Computing with GPUs

At the core of Nvidia’s revolution is its pioneering work in GPU (Graphics Processing Unit) computing. GPUs were initially designed for parallel graphics rendering. The CUDA programming model, introduced in 2006, opened that parallelism to general-purpose computation (GPGPU), and the same hardware later proved uniquely suited to the parallel processing demands of deep learning algorithms.
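To make the programming model concrete, here is a minimal sketch in plain Python that emulates how a CUDA-style kernel maps one logical thread to one data element. It is not CUDA code and needs no GPU. The `launch` helper and the kernel signature are hypothetical, written only for illustration, and the threads run one after another here rather than in parallel as they would on real hardware.

```python
# Pure-Python emulation of the CUDA thread-indexing pattern (assumption: 1-D grid).
# On a GPU these logical threads would execute concurrently; here they run serially.

def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    """Work done by one logical thread: out[i] = a * x[i] + y[i]."""
    i = block_idx * block_dim + thread_idx  # global index of this thread
    if i < len(x):  # guard: the last block may reach past the end of the data
        out[i] = a * x[i] + y[i]


def launch(kernel, n, block_dim, *args):
    """Hypothetical launcher: visits every thread of a 1-D grid covering n items."""
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) blocks
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)
    return grid_dim


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(x)
blocks = launch(saxpy_kernel, len(x), 2, 2.0, x, y, out)
print(blocks, out)
```

Because every element is computed independently, the loop order does not matter. That independence is what lets a GPU spread the same work across thousands of hardware threads.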

This acceleration is vital because modern AI, especially generative AI and Large Language Models (LLMs), requires astronomical amounts of computation. Training a single large LLM can involve processing petabytes of raw data and updating up to trillions of parameters – a task that could take hundreds of years on traditional CPUs, but weeks or even days on a GPU-accelerated supercomputer.
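A rough sense of scale comes from a widely used rule of thumb: training a dense transformer costs about 6 floating-point operations per parameter per training token. The sketch below applies that approximation. The model size, token count, throughput figures, and utilization are hypothetical inputs chosen only to show the arithmetic, not measurements of any real system.

```python
SECONDS_PER_DAY = 86_400


def training_flops(params, tokens):
    """Rule-of-thumb training cost: about 6 FLOPs per parameter per token."""
    return 6 * params * tokens


def training_seconds(params, tokens, flops_per_sec, utilization=1.0):
    """Wall-clock seconds at a sustained fraction of peak throughput."""
    return training_flops(params, tokens) / (flops_per_sec * utilization)


# Hypothetical inputs (assumptions, not vendor figures).
params = 1e12        # one trillion parameters
tokens = 1e13        # ten trillion training tokens
cpu_server = 1e13    # assumed 10 TFLOP/s peak for one CPU server
gpu_cluster = 1e19   # assumed 10 EFLOP/s peak for a large GPU cluster

cpu_days = training_seconds(params, tokens, cpu_server, 0.4) / SECONDS_PER_DAY
gpu_days = training_seconds(params, tokens, gpu_cluster, 0.4) / SECONDS_PER_DAY
print(f"CPU server:  {cpu_days / 365:,.0f} years")
print(f"GPU cluster: {gpu_days:,.1f} days")
```

The estimate ignores memory limits, communication overhead, and restarts, so it should be read as an order-of-magnitude guide only.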

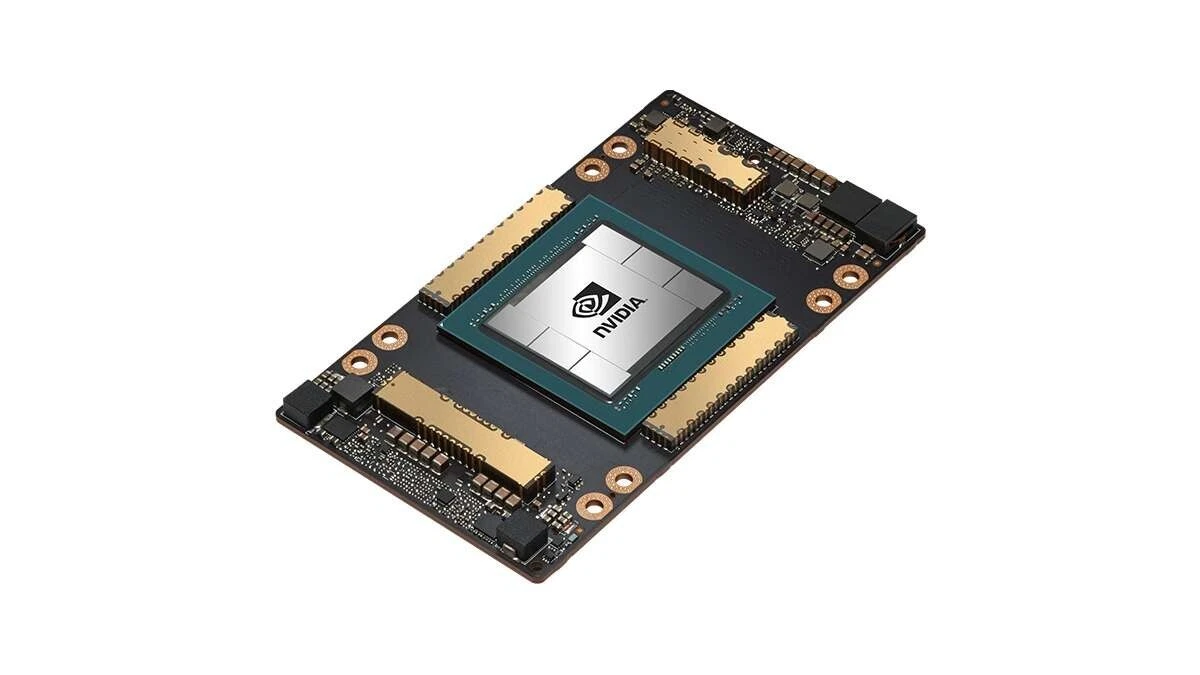

The Blackwell Era: Engineering for the Trillion-Parameter AI

Nvidia’s latest innovation, the Blackwell GPU architecture, is a testament to this relentless pursuit of AI supercomputing power. Blackwell is not just a faster chip; it’s a holistic design for the demands of the most advanced AI.

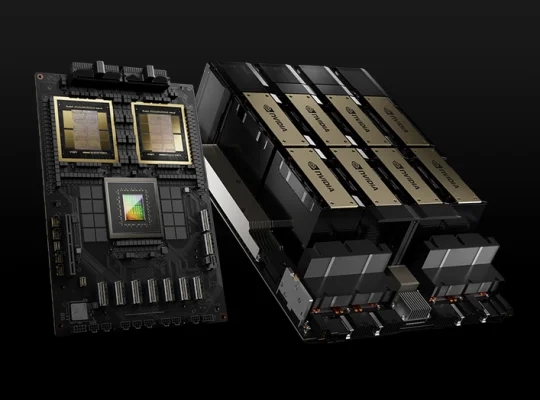

GB200 Grace Blackwell Superchip: This is the pinnacle of Nvidia’s current offering. It integrates two Blackwell GPUs with one energy-efficient Grace CPU on a single superchip. This tight coupling via ultra-fast NVLink-C2C creates a unified, high-bandwidth memory space, reducing CPU-to-GPU data-transfer bottlenecks and enabling unprecedented performance for training and inference of trillion-parameter models.

Fifth-Generation Tensor Cores: Blackwell introduces new, ultra-low precision formats (like FP4) while aiming to preserve accuracy through techniques such as fine-grained scaling. This dramatically increases throughput, allowing larger models to be processed faster with less memory.
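The idea behind a 4-bit floating-point format can be sketched in a few lines. The example below assumes the common E2M1 layout, whose representable magnitudes are 0, 0.5, 1, 1.5, 2, 3, 4, and 6, and pairs it with one shared scale per block of values. It illustrates the general technique only. It is not Nvidia's implementation, and the function names are hypothetical.

```python
# Representable magnitudes of a 4-bit E2M1 float (1 sign, 2 exponent, 1 mantissa bit).
FP4_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_block(values):
    """Map a block of floats to FP4 grid points that share one scale factor."""
    max_abs = max(abs(v) for v in values)
    # Pick the scale so the largest magnitude lands on the top grid point (6.0).
    scale = max_abs / FP4_GRID[-1] if max_abs > 0 else 1.0
    codes = []
    for v in values:
        # Nearest grid magnitude; ties resolve to the smaller grid point.
        mag = min(FP4_GRID, key=lambda g: abs(abs(v) / scale - g))
        codes.append(-mag if v < 0 else mag)
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate values from grid points and the shared scale."""
    return [scale * c for c in codes]


weights = [0.82, -0.11, 0.40, -0.65]
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)
print(codes)
print([round(r, 3) for r in restored])
```

Real hardware would store each grid point as a 4-bit code and keep the scale in a compact format. Floats are used here for readability. The memory saving is simple arithmetic: at 4 bits per value, a one-trillion-parameter model needs roughly 0.5 TB for its weights, versus roughly 2 TB at 16 bits, before counting the small overhead of the scales.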

Second-Generation Transformer Engine: Specifically optimized for the transformer architectures that define LLMs, this engine dynamically adapts to optimize performance for both training and real-time inference, accelerating the core of generative AI.

Fifth-Generation NVLink & NVLink Switch: For the largest AI models, training requires thousands of GPUs to work as one. Blackwell’s NVLink enables 1.8 TB/s of GPU-to-GPU communication per GPU, and the NVLink Switch allows for massive, unified clusters (like the GB200 NVL72, which connects 72 GPUs as a single entity) that scale to train the largest models.
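Interconnect bandwidth matters because gradient synchronization moves a great deal of data at every training step. The sketch below does two pieces of arithmetic: the aggregate bandwidth of a 72-GPU domain at 1.8 TB/s per GPU, and a bandwidth-only lower bound for a ring all-reduce, in which each GPU sends 2(N-1)/N times the payload. The payload size and the choice to pass the full per-GPU figure as the usable rate are illustrative assumptions, and latency and protocol overhead are ignored.

```python
def aggregate_bandwidth_tb_per_s(num_gpus, per_gpu_tb_per_s):
    """Sum of per-GPU link bandwidth across the whole domain."""
    return num_gpus * per_gpu_tb_per_s


def ring_allreduce_seconds(payload_bytes, num_gpus, bytes_per_sec):
    """Bandwidth-only lower bound for a ring all-reduce (latency ignored)."""
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to exchange
    # Reduce-scatter plus all-gather: each GPU sends 2 * (N - 1) / N of the payload.
    traffic = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return traffic / bytes_per_sec


total = aggregate_bandwidth_tb_per_s(72, 1.8)
print(f"Aggregate bandwidth: {total:.1f} TB/s")

# Hypothetical payload: 100 GB of gradients shared across 72 GPUs.
payload = 100e9
seconds = ring_allreduce_seconds(payload, 72, 1.8e12)
print(f"Ring all-reduce lower bound: {seconds * 1000:.1f} ms")
```

In practice the achievable rate depends on how the quoted bandwidth splits between directions and on the collective algorithm in use, so measured times will be higher than this bound.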

Beyond Hardware: The Software and Ecosystem Advantage

Nvidia’s revolution extends far beyond silicon. Its full-stack approach – integrating hardware, software, networking, and services – is what truly empowers AI supercomputing.

CUDA Platform: The foundational software layer that allows developers to harness the power of Nvidia GPUs. Its maturity, extensive libraries (cuDNN, TensorRT), and vast developer community make it the de facto standard for AI development.

NVIDIA AI Enterprise: A comprehensive software suite that provides optimized frameworks, tools, and pre-trained models, simplifying the development, deployment, and management of AI workloads at scale. This includes the NeMo framework for building, customizing, and deploying generative AI models.

Networking & Infrastructure: High-speed interconnects like Quantum-X800 InfiniBand and Spectrum-X800 Ethernet, combined with technologies like GPUDirect RDMA, ensure that data flows seamlessly between thousands of GPUs and across entire data centers, preventing bottlenecks that could cripple supercomputing performance.

DGX Systems: Nvidia’s purpose-built AI supercomputers, such as the DGX Blackwell systems (like the GB200 NVL72 rack), are pre-integrated, liquid-cooled, and optimized solutions designed for enterprise-grade AI development and deployment. These “AI factories” deliver immense compute in a compact, efficient form factor.

Omniverse and Digital Twins: Nvidia’s platform for building and operating 3D simulations and digital twins is becoming crucial for training AI in virtual environments. This allows for rapid iteration and testing of AI for robotics, autonomous vehicles, and complex industrial processes, reducing real-world costs and risks.

Real-World Impact: Reshaping Industries with AI Supercomputing

Nvidia’s supercomputing power is not just theoretical; it’s driving tangible transformations across many sectors:

Healthcare & Life Sciences: Accelerating drug discovery, genomic analysis, and medical imaging by enabling researchers to simulate molecular interactions and analyze vast datasets at unprecedented speeds.

Generative AI & LLMs: Powering the development and deployment of the most advanced LLMs, enabling new applications in content creation, personalized experiences, coding assistance, and knowledge management.

Autonomous Systems: Driving the intelligence behind self-driving cars, robotics, and drones, allowing them to perceive, reason, and act in complex real-world environments.

Scientific Research: Pushing the boundaries of climate modeling, astrophysics, materials science, and more, by enabling highly accurate and complex simulations.

Enterprise & Cloud AI: Delivering the backbone for AI-as-a-service offerings from major cloud providers, making cutting-edge AI accessible to businesses of all sizes.

Nvidia isn’t just selling chips; it’s architecting the very infrastructure of the AI future. By consistently innovating across hardware, software, and networking, Nvidia is empowering organizations worldwide to harness supercomputing power and unleash the full transformative potential of Artificial Intelligence.