Best Nvidia GPUs for AI Supercomputing in 2025

The Reign of Blackwell: Best Nvidia GPUs for AI Supercomputing in 2025

In 2025, when it comes to powering the world’s most demanding AI supercomputers, Nvidia’s Blackwell architecture reigns supreme. Building upon the immense success of the Hopper generation, Blackwell GPUs are specifically engineered to tackle the exponential growth of generative AI, large language models (LLMs), and complex scientific simulations.

For organizations building or utilizing AI supercomputing infrastructure, the choice of Nvidia Blackwell GPUs is not just about raw power, but also about the complete ecosystem of software, networking, and scalability that Nvidia provides.

Here are the top Nvidia GPUs and systems dominating the AI supercomputing landscape in 2025:

1. NVIDIA GB200 Grace Blackwell Superchip

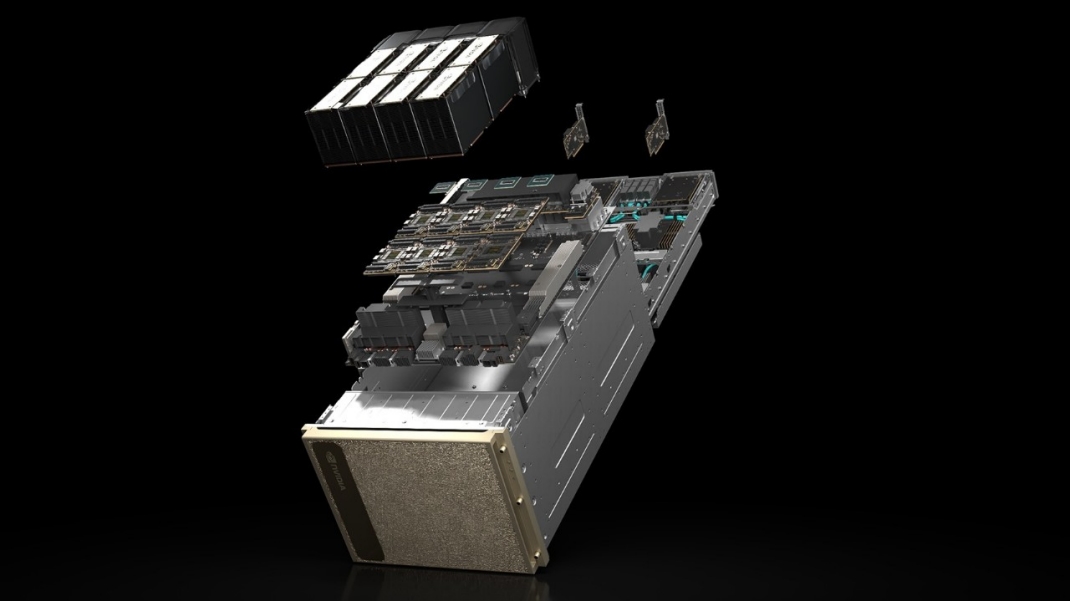

The NVIDIA GB200 Grace Blackwell Superchip is Nvidia’s flagship for AI supercomputing in 2025. It is not just a GPU: it integrates a CPU and two GPUs on one module, designed for high AI performance and energy efficiency.

What it is: The GB200 combines two Blackwell B200 GPUs with one Nvidia Grace CPU on a single superchip. The CPU and GPUs are linked by NVLink-C2C, a 900 GB/s chip-to-chip interconnect, which gives them a coherent, unified memory space with very high bandwidth.

Why it’s King for Supercomputing:

Trillion-Parameter Model Training: Nvidia positions the GB200 for training trillion-parameter models, citing support for models that scale to 10 trillion parameters, which makes it a key platform for developing the next generation of AI.

Unparalleled Performance: Nvidia cites large generational gains, particularly for LLM inference: a GB200 NVL72 rack delivers up to 30x faster real-time LLM inference than the same number of H100 GPUs.

Massive Memory and Bandwidth: Up to 384GB of HBM3e on the two GPUs plus up to 480GB of LPDDR5X on the Grace CPU gives roughly 864GB of coherently accessible memory, and the high memory bandwidth reduces bottlenecks for even the largest models.

Energy Efficiency: The Grace CPU uses power-efficient Arm Neoverse V2 cores, and for liquid-cooled, rack-scale GB200 NVL72 systems Nvidia cites up to 25x lower cost and energy consumption for LLM inference compared with the same number of H100 GPUs.

Key Specs (per GB200 Superchip):

AI Performance: Up to 40 petaFLOPS (FP4) / 20 petaFLOPS (FP8) with sparsity, i.e. up to 20 / 10 petaFLOPS per B200 GPU

GPU Memory: Up to 192GB HBM3e (per B200 GPU, total 384GB for two B200s in GB200)

Memory Bandwidth: Up to 8 TB/s (per B200 GPU)

Interconnect: Fifth-generation NVLink: 1.8TB/s per GPU
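The per-superchip totals follow directly from the per-GPU figures above. The minimal Python sketch below does that arithmetic; treat the results as nominal upper bounds. The 480GB LPDDR5X capacity for the Grace CPU is an assumption (the maximum configuration Nvidia lists for Grace), not a figure from this list, and the function name is hypothetical.

```python
# Per-GPU figures for a B200 inside a GB200 superchip, taken from the spec list above.
HBM_PER_GPU_GB = 192        # HBM3e capacity per B200 (upper bound)
HBM_BW_PER_GPU_TBS = 8.0    # HBM3e bandwidth per B200, TB/s
NVLINK_PER_GPU_TBS = 1.8    # fifth-generation NVLink bandwidth per B200, TB/s

# Assumption: Grace CPU configured with its maximum 480 GB of LPDDR5X.
GRACE_LPDDR5X_GB = 480

GPUS_PER_SUPERCHIP = 2


def superchip_totals(gpus: int = GPUS_PER_SUPERCHIP) -> dict:
    """Scale per-GPU specs to one GB200 superchip (hypothetical helper, nominal values)."""
    hbm_gb = gpus * HBM_PER_GPU_GB
    return {
        "hbm_gb": hbm_gb,
        "hbm_bw_tbs": gpus * HBM_BW_PER_GPU_TBS,
        "nvlink_tbs": gpus * NVLINK_PER_GPU_TBS,
        # GPU HBM plus CPU LPDDR5X, both reachable over the coherent NVLink-C2C link.
        "total_addressable_gb": hbm_gb + GRACE_LPDDR5X_GB,
    }


if __name__ == "__main__":
    for key, value in superchip_totals().items():
        print(f"{key}: {value}")
```

Run as a script, it prints 384GB of HBM3e, 16 TB/s of HBM bandwidth, 3.6 TB/s of NVLink bandwidth and 864GB of total addressable memory per superchip.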

2. NVIDIA B200 Tensor Core GPU

The NVIDIA B200 Tensor Core GPU is the core component of the GB200 Superchip and is also available as a standalone GPU for integration into various server platforms.

What it is: The B200 is a dual-die Blackwell GPU packing 208 billion transistors, designed for standalone deployments or as part of multi-GPU servers.

Why it’s Crucial for Supercomputing: It brings the foundational Blackwell architecture’s advancements to a broader range of deployments.

Fifth-Generation Tensor Cores: Native support for FP4, FP6, and FP8 precisions, which reduces the memory and bandwidth each value needs and raises throughput for both training and inference.

Second-Generation Transformer Engine: Optimizes performance for the transformer architectures prevalent in LLMs and mixture-of-experts (MoE) models.

High Memory Capacity: With 192GB of HBM3e memory, it can house very large models on a single GPU.
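FP4 has only a handful of representable values, so low-precision formats pair 4-bit elements with a scale factor shared by a small block of values. The Python sketch below illustrates that idea with simplified absmax scaling onto the FP4 E2M1 grid. It is a hypothetical illustration, not Nvidia’s exact NVFP4 or the OCP MXFP4 encoding, which store the block scale in their own formats and fix specific block sizes.

```python
# All non-negative magnitudes representable in FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit).
E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = 6.0


def quantize_block_fp4(block: list[float]) -> tuple[float, list[float]]:
    """Simplified absmax block scaling onto the E2M1 grid (illustrative only).

    Returns the shared scale and the dequantized values. Not the exact NVFP4
    or MXFP4 encoding.
    """
    amax = max(abs(x) for x in block)
    # Map the largest magnitude in the block onto the largest E2M1 value.
    scale = amax / E2M1_MAX if amax > 0 else 1.0
    dequantized = []
    for x in block:
        magnitude = abs(x) / scale
        # Round to the nearest representable E2M1 magnitude, then restore sign and scale.
        nearest = min(E2M1_VALUES, key=lambda v: abs(v - magnitude))
        dequantized.append((nearest if x >= 0 else -nearest) * scale)
    return scale, dequantized


if __name__ == "__main__":
    scale, approx = quantize_block_fp4([0.12, -0.9, 2.4, 0.03])
    print(scale, approx)
```

With the block [0.12, -0.9, 2.4, 0.03] the shared scale is 0.4 and the values dequantize to roughly 0.2, -0.8, 2.4 and 0.0: the largest value survives while the smallest collapses to zero, which is why giving each small block its own scale preserves accuracy better than one scale for a whole tensor.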

Key Specs:

AI Performance: Up to 18 petaFLOPS (FP4) / 9 petaFLOPS (FP8), with sparsity

GPU Memory: 192GB HBM3e

Memory Bandwidth: 8 TB/s

Interconnect: Fifth-generation NVLink: 1.8TB/s, PCIe Gen6
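A rough way to read the 192GB figure is to divide it by the bytes each parameter needs at a given precision. The Python sketch below does that for model weights only; the `reserve_fraction` parameter is a hypothetical knob for memory held back for KV cache, activations and runtime overhead. Real deployments, and especially training (which also stores gradients and optimizer state), need considerably more headroom.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"FP16/BF16": 2.0, "FP8": 1.0, "FP6": 0.75, "FP4": 0.5}


def max_params_billions(memory_gb: float, precision: str, reserve_fraction: float = 0.0) -> float:
    """Upper bound on parameter count (billions) whose weights fit in memory_gb.

    memory_gb uses decimal gigabytes (1 GB = 1e9 bytes). reserve_fraction is a
    hypothetical share of memory held back for KV cache, activations and overhead.
    """
    usable_bytes = memory_gb * 1e9 * (1.0 - reserve_fraction)
    return usable_bytes / BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: ~{max_params_billions(192, precision):.0f}B parameters (weights only)")
```

For 192GB, it prints upper bounds of about 96B parameters at FP16/BF16, 192B at FP8, 256B at FP6 and 384B at FP4.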

3. NVIDIA Blackwell Ultra GPUs (Expected 2025/2026)

While the initial Blackwell GPUs (B200, GB200) are already shipping in 2025, Nvidia has also unveiled Blackwell Ultra GPUs on its roadmap, expected to arrive later in 2025 or early 2026.

What they are: These are enhanced versions of the Blackwell architecture, designed to push the boundaries of performance and efficiency even further.

Why they’ll be key: Blackwell Ultra GPUs are designed to offer more compute for reasoning models and agentic AI systems, with Nvidia estimating up to 50x higher AI factory output than the Hopper platform. This continued cadence is intended to keep Nvidia ahead in the most compute-intensive AI applications.

Key Systems for AI Supercomputing:

NVIDIA DGX Blackwell Systems: Nvidia’s flagship AI supercomputing systems, built entirely around the Blackwell architecture. The DGX GB200 NVL72 is a prime example, a liquid-cooled, rack-scale system integrating 36 GB200 Superchips (72 B200 GPUs and 36 Grace CPUs) into a single, massive compute unit. These systems are designed for training the largest frontier models from scratch.
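Rack-level figures for the GB200 NVL72 are largely per-GPU specs multiplied by the GPU count. The Python sketch below, with a hypothetical `GpuSpec` type filled from the B200 numbers listed earlier, computes nominal totals for 36 superchips (72 GPUs).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    """Hypothetical container for per-GPU specs (nominal upper bounds)."""
    hbm_gb: float        # HBM3e capacity per GPU, GB
    hbm_bw_tbs: float    # HBM bandwidth per GPU, TB/s
    nvlink_tbs: float    # NVLink bandwidth per GPU, TB/s


B200 = GpuSpec(hbm_gb=192, hbm_bw_tbs=8.0, nvlink_tbs=1.8)


def rack_totals(gpu: GpuSpec, superchips: int, gpus_per_superchip: int = 2) -> dict:
    """Nominal rack-level totals: per-GPU spec times GPU count."""
    n = superchips * gpus_per_superchip
    return {
        "gpus": n,
        "hbm_tb": n * gpu.hbm_gb / 1000,    # decimal terabytes
        "hbm_bw_tbs": n * gpu.hbm_bw_tbs,
        "nvlink_tbs": n * gpu.nvlink_tbs,
    }


if __name__ == "__main__":
    print(rack_totals(B200, superchips=36))
```

It reports 72 GPUs, about 13.8TB of HBM3e, 576 TB/s of aggregate HBM bandwidth and about 130 TB/s of aggregate NVLink bandwidth, the last of which matches the NVLink figure commonly quoted for the NVL72 rack. Usable capacity can be somewhat below these nominal values.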

NVIDIA DGX Spark & DGX Station: For personal AI supercomputing and accelerated development, Nvidia offers the DGX Spark (with the GB10 Grace Blackwell Superchip) and the DGX Station (with the GB300 Grace Blackwell Ultra Desktop Superchip), bringing serious AI power to individual researchers and smaller teams.

Cloud Deployments (e.g., AWS EC2 P6e-GB200 UltraServers): Major cloud providers such as AWS are rapidly deploying Nvidia Blackwell-powered instances. These offer on-demand access to large Blackwell clusters with high-bandwidth networking (Elastic Fabric Adapter with GPUDirect RDMA) and robust infrastructure, making cutting-edge AI supercomputing accessible without large capital investments.

The Nvidia Ecosystem Advantage:

Beyond the raw hardware, Nvidia’s leadership in AI supercomputing in 2025 rests on its comprehensive ecosystem:

CUDA: The de facto standard programming model for GPU computing, backed by a vast array of libraries, tools, and a massive developer community.

NVIDIA AI Enterprise Software: A suite of optimized frameworks, libraries (cuDNN, TensorRT), and pre-trained models (like those from the NeMo framework) that accelerate AI development and deployment.

NVLink & Networking: Nvidia’s advanced interconnect technologies (NVLink, NVLink Switch) and high-speed networking (Quantum-X800 InfiniBand, Spectrum-X800 Ethernet) ensure seamless, low-latency communication across thousands of GPUs, critical for scalable AI training.
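Why interconnect bandwidth matters for scaling can be made concrete with the textbook bandwidth model of a ring all-reduce, the collective commonly used to average gradients in data-parallel training: each of n GPUs sends and receives 2(n - 1)/n times the message size. The Python sketch below gives a lower bound under that model only; it ignores latency and compute overlap, and NCCL may pick other algorithms (for example tree or NVLink SHARP-based ones) on real systems. The 70B-parameter model and the 800 Gb/s port are hypothetical inputs, and the 0.9 TB/s figure assumes the 1.8 TB/s NVLink number counts both directions.

```python
def ring_allreduce_seconds(message_bytes: float, n_gpus: int, link_bytes_per_s: float) -> float:
    """Bandwidth-only lower bound for a ring all-reduce.

    Each rank sends (and receives) 2 * (n - 1) / n * message_bytes over a link of
    link_bytes_per_s in each direction; latency and protocol overhead are ignored.
    """
    if n_gpus < 2:
        return 0.0
    traffic = 2 * (n_gpus - 1) / n_gpus * message_bytes
    return traffic / link_bytes_per_s


if __name__ == "__main__":
    grad_bytes = 70e9 * 2            # hypothetical 70B-parameter model, BF16 gradients
    nvlink_per_direction = 0.9e12    # assumes 1.8 TB/s NVLink is bidirectional
    network_port = 100e9             # illustrative 800 Gb/s port = 100 GB/s
    for name, bw in [("NVLink", nvlink_per_direction), ("800G network", network_port)]:
        t = ring_allreduce_seconds(grad_bytes, 72, bw)
        print(f"{name}: {t:.3f} s")
```

With these inputs the NVLink case comes out at about 0.31 s and the 800 Gb/s case at about 2.76 s per full gradient all-reduce, a roughly 9x gap driven purely by link bandwidth.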

Omniverse and Simulation: Nvidia’s investments in digital twins and simulation environments are crucial for training AI for robotics, autonomous systems, and scientific discovery, bridging the gap between virtual and real worlds.

In 2025, for any organization pushing the boundaries of AI, Nvidia’s Blackwell GPUs and the integrated Grace Blackwell Superchips are the foundational building blocks for next-generation AI supercomputing, delivering unprecedented performance, scalability, and efficiency.