NVIDIA’s Blackwell GPU architecture, the successor to Hopper, is engineered for the most demanding artificial intelligence (AI) and high-performance computing (HPC) workloads. Named after mathematician David Blackwell, it sets new benchmarks in performance, scalability, and efficiency for accelerated computing.

Here’s a detailed look at its key features and performance capabilities:

Core Features of NVIDIA Blackwell GPUs

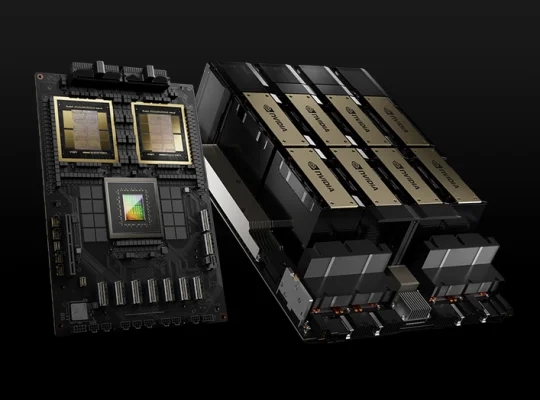

1. Revolutionary Dual-Die Design (B200)

The World’s Most Powerful Chip: The Blackwell B200 GPU packs 208 billion transistors, which NVIDIA bills as the most powerful chip it has built. It achieves this by integrating two dies, each approaching the reticle limit (the largest die area a lithography tool can pattern in a single exposure), into a single, cohesive GPU. The GB200 name refers to the Grace Blackwell Superchip, which pairs two of these GPUs with a Grace CPU.

NV-High Bandwidth Interface (NV-HBI): These two dies are seamlessly connected by a 10 TB/s (terabytes per second) NV-HBI link. This high-speed interconnect ensures full cache coherency and unified memory access across both dies, making them function as one powerful, unified GPU. This innovative design allows NVIDIA to scale performance beyond the physical limitations of a single large chip.

2. Second-Generation Transformer Engine with FP4

AI for AI: Blackwell’s second-generation Transformer Engine is specifically optimized for the “Transformer” architecture, which underpins modern large language models (LLMs) and Mixture-of-Experts (MoE) models.

New Precision Formats: It introduces native hardware support for the MXFP4 (4-bit floating point) and MXFP6 (6-bit floating point) micro-scaling formats. This matters most for AI inference: FP4 halves the bits per weight relative to FP8, cutting memory footprint and bandwidth requirements so that much larger models can run with lower memory and power demands, with accuracy that can stay close to higher-precision baselines when the model is quantized carefully.

Dynamic Range Management: Advanced dynamic range management algorithms and fine-grain scaling techniques (micro-tensor scaling) optimize performance and accuracy across a wide range of AI computations.
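To make the micro-scaling idea concrete, here is a minimal Python sketch of block quantization in the style of the OCP Microscaling (MX) FP4 format: 32 elements share one power-of-two scale, and each element is stored as a 4-bit E2M1 value. The function names are hypothetical, tie rounding is simplified, and the sketch illustrates the number format only; it is not NVIDIA’s Transformer Engine implementation.

```python
import math

# Magnitudes representable by an FP4 E2M1 element
# (1 sign bit, 2 exponent bits, 1 mantissa bit).
E2M1_VALUES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
E2M1_EMAX = 2      # largest element exponent: 6.0 = 1.5 * 2**2
BLOCK_SIZE = 32    # number of elements that share one scale in MX formats


def quantize_block(block):
    """Return (power-of-two scale, list of E2M1 values) for one block."""
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return 1.0, [0.0] * len(block)
    # Shared scale: a power of two chosen so the largest input lands
    # near the top of the E2M1 range.
    scale = 2.0 ** (math.floor(math.log2(max_abs)) - E2M1_EMAX)
    elements = []
    for x in block:
        # Clamp to the largest representable magnitude, then snap to the
        # nearest grid point (ties go to the smaller value in this sketch).
        magnitude = min(abs(x) / scale, E2M1_VALUES[-1])
        nearest = min(E2M1_VALUES, key=lambda v: abs(v - magnitude))
        elements.append(math.copysign(nearest, x))
    return scale, elements


def dequantize_block(scale, elements):
    """Reconstruct approximate real values from a quantized block."""
    return [scale * e for e in elements]


if __name__ == "__main__":
    block = [0.1 * i for i in range(-16, 16)]   # 32 sample values
    scale, elements = quantize_block(block)
    restored = dequantize_block(scale, elements)
    worst = max(abs(a - b) for a, b in zip(block, restored))
    print(f"scale={scale}, worst absolute error={worst:.3f}")
```

Each block costs 32 x 4 bits for the elements plus one 8-bit shared scale, or 4.25 bits per value, which is where the memory savings over 8-bit and 16-bit formats come from.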

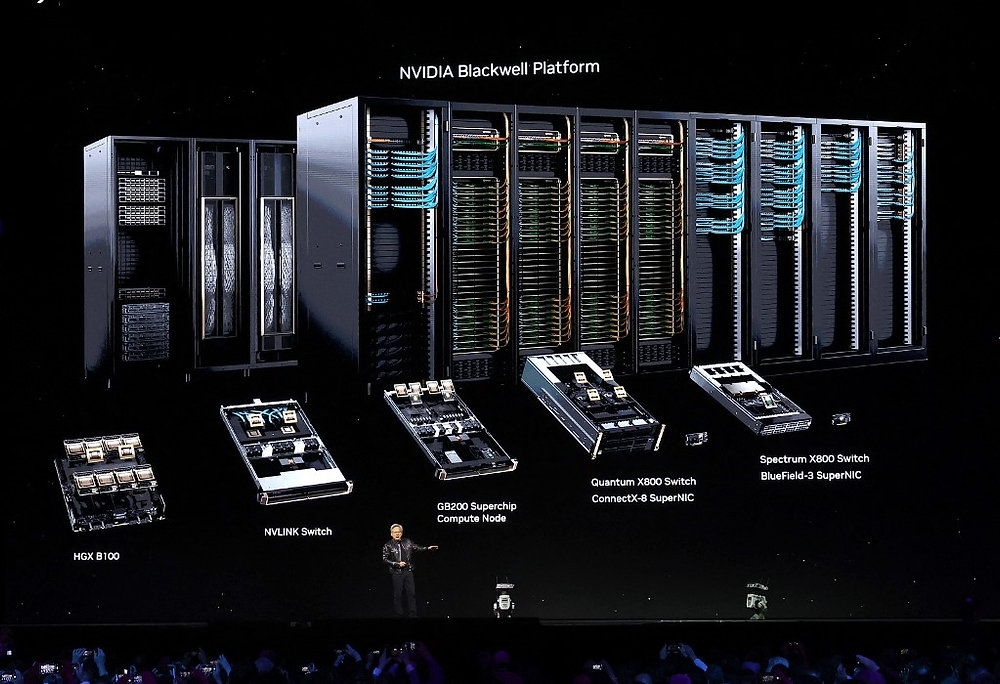

3. Fifth-Generation NVLink and NVLink Switch

Unprecedented Interconnect Bandwidth: NVLink-5 doubles GPU interconnect bandwidth from Hopper’s 900 GB/s to 1.8 TB/s per GPU, significantly improving data transfer rates between GPUs.

Scalability for Trillion-Parameter Models: The new NVLink Switch lets a single NVLink domain scale to as many as 576 GPUs. The GB200 NVL72 rack system, for example, connects 72 GPUs that operate as one large accelerator. This reduces the communication bottlenecks that have historically limited the training and deployment of the largest AI models. With 130 TB/s of aggregate GPU bandwidth within an NVL72 domain, Blackwell sets a new standard for multi-GPU communication.
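The 130 TB/s figure follows from the per-GPU number: an NVL72 domain contains 72 GPUs, each with 1.8 TB/s of NVLink bandwidth. A short Python sketch of that arithmetic (the function name is hypothetical, and the result is a simple sum that ignores topology and protocol overhead):

```python
NVLINK5_PER_GPU_TBPS = 1.8   # per-GPU NVLink-5 bandwidth quoted in the text
NVL72_GPUS = 72              # GPUs in one GB200 NVL72 domain


def aggregate_nvlink_tbps(num_gpus, per_gpu_tbps=NVLINK5_PER_GPU_TBPS):
    """Sum of per-GPU NVLink bandwidth across a domain, in TB/s."""
    return num_gpus * per_gpu_tbps


if __name__ == "__main__":
    total = aggregate_nvlink_tbps(NVL72_GPUS)
    print(f"NVL72 aggregate NVLink bandwidth: {total:.1f} TB/s")
```

The result is about 129.6 TB/s, which rounds to the quoted 130 TB/s.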

4. Massive HBM3e Memory Capacity and Bandwidth

192 GB of HBM3e Memory: Blackwell GPUs feature 192 GB of HBM3e (High Bandwidth Memory 3e), more than double the 80 GB of the previous-generation H100.

8 TB/s Memory Bandwidth: This enormous memory capacity is complemented by an aggregate memory bandwidth of 8 TB/s. This is crucial for memory-bound AI workloads, allowing larger models and datasets to reside entirely on the GPU, drastically reducing data transfer overheads.
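A back-of-the-envelope Python sketch shows how capacity, bandwidth, and precision interact. The model sizes below are hypothetical, the helper names are made up for illustration, and the estimate counts weights only (no KV cache, activations, or framework overhead), so treat the numbers as rough bounds rather than measurements.

```python
HBM_CAPACITY_GB = 192       # per-GPU HBM3e capacity quoted in the text
HBM_BANDWIDTH_TBPS = 8.0    # per-GPU memory bandwidth quoted in the text


def weight_footprint_gb(num_params, bits_per_param):
    """Memory needed for the weights alone, in GB (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def fits_on_one_gpu(num_params, bits_per_param, capacity_gb=HBM_CAPACITY_GB):
    """True if the weights alone fit in one GPU's HBM."""
    return weight_footprint_gb(num_params, bits_per_param) <= capacity_gb


def min_seconds_per_weight_pass(num_params, bits_per_param,
                                bandwidth_tbps=HBM_BANDWIDTH_TBPS):
    """Lower bound on the time to stream every weight from HBM once."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / (bandwidth_tbps * 1e12)


if __name__ == "__main__":
    # Hypothetical dense models with 70 and 200 billion parameters.
    for params in (70e9, 200e9):
        for bits in (16, 8, 4):
            size = weight_footprint_gb(params, bits)
            fits = fits_on_one_gpu(params, bits)
            ms = min_seconds_per_weight_pass(params, bits) * 1e3
            print(f"{params / 1e9:.0f}B params @ {bits}-bit: "
                  f"{size:.0f} GB, fits={fits}, >= {ms:.2f} ms per pass")
```

Under these assumptions, a 200-billion-parameter model needs 400 GB for 16-bit weights and does not fit on one GPU, while the same weights at 4 bits need 100 GB and do.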

5. Dedicated Decompression Engine

Accelerated Data Processing: Blackwell includes a specialized Decompression Engine that can decompress data at up to 800 GB/s. NVIDIA reports that database query benchmarks run roughly 6x faster than on the H100 GPU and 18x faster than on CPUs as a result. This speeds up database operations and data analytics, as well as the preparation of large datasets for AI training and inference.

6. Confidential Computing and Secure AI

Enhanced Data Security: Blackwell extends Trusted Execution Environments (TEE) to the GPU, offering Confidential Computing capabilities. This provides robust security for sensitive AI workloads, protecting data and intellectual property even when running in shared environments. It’s vital for industries with strict data privacy regulations like healthcare and finance.

7. Reliability, Availability, and Serviceability (RAS) Engine

Optimized Uptime: A dedicated RAS engine leverages AI-driven predictive management to monitor hardware and software health, detect potential faults early, and prevent downtime. It provides in-depth diagnostics, fault isolation, and error correction, ensuring maximum system uptime and reducing operating costs for massive AI deployments.

Performance Capabilities

Blackwell GPUs deliver unparalleled performance gains across various AI and HPC workloads:

AI Inference: For large language models (LLMs), NVIDIA claims up to 15x faster inference than the H100, especially when leveraging the new FP4 precision. For certain resource-intensive LLMs (e.g., 1.8 trillion-parameter Mixture-of-Experts models), NVIDIA cites up to a 30x speedup for the GB200 NVL72 compared to Hopper. Gains of this size make real-time, highly complex generative AI applications more economically viable.

AI Training: Blackwell also accelerates training. NVIDIA claims up to 3-4x faster training than H100 at the system level, while measured results are more modest: in MLPerf benchmarks, it has shown a 2.0x performance improvement for GPT-3 pre-training and 2.2x for Llama 2 70B fine-tuning on a per-GPU basis.

Memory-Intensive Workloads: The massive 192 GB HBM3e and 8 TB/s bandwidth are critical for workloads that require large models or extensive data buffering, such as large sparse models or recommender systems, alleviating memory bottlenecks.

Scalability for Frontier Models: The fifth-generation NVLink and NVLink Switch enable seamless scaling for models with trillions of parameters, allowing hundreds of GPUs to function as a single, coherent unit, which was previously challenging due to interconnect limitations.

Energy Efficiency: Despite its immense power, Blackwell significantly improves performance per watt. NVIDIA claims up to 25x greater energy efficiency for certain complex generative AI models when comparing full rack systems (e.g., GB200 NVL72) against previous generations. This translates to substantial reductions in operational costs and a greener footprint for data centers.

NVIDIA’s Blackwell architecture is poised to be the driving force behind the next generation of AI breakthroughs, offering the raw power, unprecedented scalability, and efficiency required to tackle the most ambitious computing challenges.