NVIDIA’s Blackwell GPU architecture, particularly the B200 and GB200, sets a new benchmark for speed and efficiency in AI, especially for large language models (LLMs) and generative AI. When compared to offerings from AMD and Intel, Blackwell generally maintains NVIDIA’s leadership, though competitors are making strides in specific areas, particularly concerning price-performance and open ecosystems.

Here’s a breakdown of Blackwell’s speed and efficiency compared to AMD and Intel:

NVIDIA Blackwell vs. AMD Instinct MI300X/MI350X

NVIDIA Blackwell’s Advantages (B200/GB200):

Raw Performance (especially in lower precision): Blackwell’s B200/GB200 offers significantly higher raw computational power, particularly with its new FP4 precision and enhanced FP8 capabilities.

- Inference Speed: For large, complex models like GPT-MoE-1.8T, NVIDIA states that a GB200 system can achieve up to 30x faster real-time inference than an H100 system. Independent third-party comparisons of Blackwell against the MI300X are still scarce, but NVIDIA’s internal benchmarks and early MLPerf results indicate a substantial lead.

- Training Speed: Blackwell also shows significant gains in training, with systems like the GB200 NVL72 being 2.2x faster for Llama 3.1 405B training compared to a Hopper system.

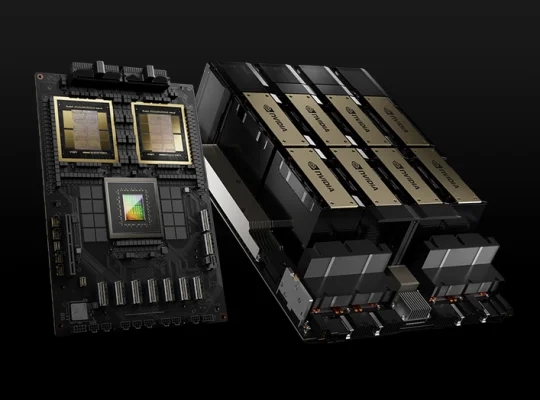

- Interconnect: NVIDIA’s 5th-gen NVLink provides 1.8 TB/s of bidirectional throughput per GPU. With the NVLink Switch, it can connect up to 72 Blackwell GPUs as a single logical unit (GB200 NVL72), offering a massive 1.4 EFLOPS of AI performance and 30 TB of fast memory. This unified, high-bandwidth interconnect is a key differentiator for large-scale distributed AI workloads.
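To put the rack-level interconnect figures in per-GPU terms, the short sketch below divides the quoted GB200 NVL72 numbers by the GPU count. It uses only the values stated above; the function name and dictionary keys are illustrative, and decimal units (1 TB = 1000 GB) are assumed.

```python
# Rack-level figures quoted in the text for the GB200 NVL72.
GPUS_PER_RACK = 72
NVLINK_TB_PER_S_PER_GPU = 1.8   # bidirectional, per GPU
RACK_AI_EFLOPS = 1.4
RACK_FAST_MEMORY_TB = 30.0


def nvl72_summary():
    """Derive aggregate and per-GPU numbers from the quoted rack figures."""
    return {
        # Sum of per-GPU NVLink bandwidth across the rack, in TB/s.
        "aggregate_nvlink_tb_per_s": GPUS_PER_RACK * NVLINK_TB_PER_S_PER_GPU,
        # Rack AI throughput split evenly across GPUs, in PFLOPS.
        "ai_pflops_per_gpu": RACK_AI_EFLOPS * 1000 / GPUS_PER_RACK,
        # Rack "fast memory" split evenly across GPUs, in GB.
        "fast_memory_gb_per_gpu": RACK_FAST_MEMORY_TB * 1000 / GPUS_PER_RACK,
    }


if __name__ == "__main__":
    for name, value in nvl72_summary().items():
        print(f"{name}: {value:.1f}")
```

The per-GPU share of the 30 TB figure comes out well above the 192 GB attributed to a B200 elsewhere in this comparison, which suggests the rack-level number counts more than GPU HBM alone.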

Software Ecosystem (CUDA): NVIDIA’s well-established CUDA platform and extensive software ecosystem (libraries, frameworks, developer tools) remain a major advantage. This provides mature, highly optimized solutions that are often difficult for competitors to match in terms of breadth and performance across various AI workloads.

Advanced Features: Blackwell introduces features like the second-generation Transformer Engine with FP4 support and a dedicated decompression engine, which are specifically tailored to accelerate LLM training and inference.
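To give a feel for what 4-bit floating point means for model weights, here is a minimal sketch of block-scaled quantization. It assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit) for the 4-bit values, which the text above does not specify, and it keeps the shared scale as an ordinary Python float. It is an illustration of the idea, not a description of how the Transformer Engine implements FP4.

```python
# Magnitudes representable by a 4-bit E2M1 float (assumed format).
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_block_fp4(values):
    """Quantize a block of floats to E2M1 values with one shared scale.

    Returns (scale, quantized), where each quantized entry is a signed
    E2M1 magnitude and scale * quantized[i] approximates values[i].
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    if max_abs == 0.0:
        return 1.0, [0.0] * len(values)
    # Map the largest magnitude in the block onto the largest E2M1 value.
    scale = max_abs / E2M1_MAGNITUDES[-1]
    quantized = []
    for v in values:
        target = abs(v) / scale
        # Nearest representable magnitude; ties go to the smaller one.
        nearest = min(E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        quantized.append(nearest if v >= 0 else -nearest)
    return scale, quantized


def dequantize_block(scale, quantized):
    """Recover approximate float values from the scaled 4-bit codes."""
    return [scale * q for q in quantized]


if __name__ == "__main__":
    scale, codes = quantize_block_fp4([1.0, -6.0, 3.0, 0.2])
    print(scale, codes, dequantize_block(scale, codes))
```

With only eight magnitudes available, small values in a block collapse to zero when one large value sets the scale, which is why low-precision formats are typically paired with fine-grained scaling.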

AMD Instinct MI300X/MI350X’s Strengths:

- Memory Capacity & Bandwidth (MI300X): The MI300X offers a substantial 192 GB of HBM3 memory (comparable to Blackwell’s B200) with a bandwidth of 5.3 TB/s. The MI325X raises bandwidth to 6 TB/s and capacity to 256 GB (AMD initially announced 288 GB), and the MI350 series reaches 288 GB. This large memory capacity allows users to fit very large models entirely on a single card, reducing the need for model parallelism in some cases.

- Price-Performance: AMD often positions its Instinct accelerators as offering a superior price-performance ratio. Reports suggest that MI300X cards can be significantly cheaper (e.g., ~$12k-$15k) than NVIDIA’s H100, and likely cheaper than Blackwell, which is estimated at ~$30k-$70k for B100/B200. This can translate to a much lower CapEx and potentially lower TCO for certain deployments.

- Energy Efficiency (for MI300X vs. H100): While Blackwell is very efficient per operation, the MI300X at 750W TDP is sometimes noted for its competitive power efficiency, especially when considering the large memory capacity it offers. AMD says its MI350 series GPUs exceeded its five-year energy-efficiency goal of a 30x improvement, reaching 38x.

- Double Precision (FP64) Performance: Historically, AMD’s Instinct accelerators have shown stronger performance in double-precision (FP64) workloads, which are crucial for traditional HPC and scientific simulations, an area where NVIDIA might have slightly reduced focus with Blackwell in favor of AI-specific optimizations.

- Open Software Stack (ROCm): AMD champions an open-source software approach with ROCm, which can be attractive to developers seeking alternatives to NVIDIA’s proprietary CUDA. While ROCm has matured significantly, it still faces challenges in catching up to CUDA’s ecosystem breadth and optimization.
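The memory-capacity point in the list above comes down to simple arithmetic: weight storage is roughly parameter count times bytes per parameter. The sketch below checks whether a model's weights fit on one 192 GB card. The `overhead_fraction` parameter is a hypothetical allowance for KV cache, activations, and runtime buffers; its default is a placeholder, not a measured value.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weight_footprint_gb(num_params, precision):
    """Approximate weight storage in GB (decimal, 1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_card(num_params, precision, card_memory_gb=192.0,
                 overhead_fraction=0.2):
    """Return True if weights plus an assumed overhead fit on one card.

    overhead_fraction is an illustrative guess, not a vendor figure.
    """
    needed_gb = weight_footprint_gb(num_params, precision) * (1 + overhead_fraction)
    return needed_gb <= card_memory_gb


if __name__ == "__main__":
    for params, precision in [(70e9, "fp16"), (405e9, "fp8"), (405e9, "fp4")]:
        gb = weight_footprint_gb(params, precision)
        print(f"{params / 1e9:.0f}B @ {precision}: {gb:.1f} GB weights, "
              f"fits on 192 GB card: {fits_on_card(params, precision)}")
```

Under these assumptions a 70B-parameter model in FP16 fits on a single 192 GB card, while a 405B-parameter model does not fit even at 4-bit precision and still needs to be split across cards.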

Overall Comparison (Blackwell vs. MI300X/MI350X):

For cutting-edge, large-scale AI training and inference, especially for LLMs that benefit from FP4/FP8 precision and massive multi-GPU scaling, NVIDIA Blackwell appears to maintain a clear performance lead. However, AMD’s MI300X/MI350X is a strong competitor for specific workloads, particularly where a large memory footprint per card is critical and for customers prioritizing a lower upfront cost or seeking an open software alternative.
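One rough way to compare upfront cost is dollars per gigabyte of on-card memory. The sketch below uses the reported price ranges cited in this comparison, which are estimates rather than list prices, and assumes 192 GB for both the MI300X and the B200. It says nothing about compute throughput, so it should be read only as a memory-cost indicator.

```python
def usd_per_gb(price_range_usd, memory_gb):
    """Return (low, high) cost per GB of on-card memory for a price range."""
    low, high = price_range_usd
    return low / memory_gb, high / memory_gb


# Reported, unofficial price estimates quoted in the text.
MI300X_PRICE_RANGE = (12_000, 15_000)
BLACKWELL_PRICE_RANGE = (30_000, 70_000)   # quoted for B100/B200
MEMORY_GB = 192                            # assumed for both cards

if __name__ == "__main__":
    for name, prices in [("MI300X", MI300X_PRICE_RANGE),
                         ("B100/B200", BLACKWELL_PRICE_RANGE)]:
        low, high = usd_per_gb(prices, MEMORY_GB)
        print(f"{name}: ${low:.2f} to ${high:.2f} per GB")
```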

NVIDIA Blackwell vs. Intel Gaudi 3

NVIDIA Blackwell’s Advantages:

- Absolute Performance: Blackwell GPUs significantly outpace Intel’s Gaudi 3 in raw AI compute power, especially with their new FP4 capabilities. While Intel claims Gaudi 3 can outperform NVIDIA’s H100 in certain benchmarks, Blackwell is the generation after Hopper, designed for even greater scale and efficiency.

- Ecosystem and Market Dominance: NVIDIA’s established dominance in the AI accelerator market, backed by its comprehensive software stack (CUDA, cuDNN, etc.) and deep integration with major AI frameworks, provides a significant ecosystem advantage.

- Scalability (NVLink): NVIDIA’s NVLink interconnect and NVLink Switch technology allow for much tighter integration and higher bandwidth communication across tens or hundreds of GPUs, creating large, unified compute clusters that are currently unmatched by competitors for AI training.

Intel Gaudi 3’s Strengths:

- Price-Performance: Intel’s primary strategy with Gaudi 3 is aggressive pricing. An 8-card Gaudi 3 kit is priced significantly lower (e.g., $125,000) than comparable NVIDIA solutions (H100, and certainly Blackwell). Intel aims to offer a compelling “price-performance” advantage, often claiming it can be 2x more cost-effective for certain LLM inference workloads compared to H100.

- Open Ethernet Standard: Gaudi 3 leverages standard Ethernet for its interconnect (24 x 200GbE ports), which can be appealing to data centers looking to avoid proprietary interconnects and integrate more easily into existing network infrastructures. This contrasts with NVIDIA’s proprietary NVLink.

- Integrated Design: Gaudi 3 takes a system-on-chip approach that integrates the AI compute engines, HBM, and network interfaces in a single package, aiming for efficiency.

- Focus on Cost-Effective Inference: Intel openly acknowledges that it’s not directly chasing the absolute high-end training market dominated by NVIDIA. Instead, Gaudi 3 is specifically optimized for cost-effective inference of large language models and training of smaller to medium-sized models, appealing to enterprises seeking more economical AI deployment.
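The Ethernet and pricing figures in the list above can be turned into directly comparable numbers with a little unit conversion. The sketch below converts 24 x 200GbE into bytes per second and divides the kit price by card count. It assumes raw line rate with no protocol overhead, decimal units, and that the 1.8 TB/s bidirectional NVLink figure quoted earlier splits evenly between directions; the function names are illustrative.

```python
def ethernet_gb_per_s_per_direction(ports, gbit_per_s_per_port):
    """Aggregate one-way bandwidth in GB/s (8 bits per byte, raw line rate)."""
    return ports * gbit_per_s_per_port / 8


def per_card_price_usd(kit_price_usd, cards_per_kit):
    """Average price per accelerator in a multi-card kit."""
    return kit_price_usd / cards_per_kit


# Figures quoted in the text.
GAUDI3_ONE_WAY_GB_PER_S = ethernet_gb_per_s_per_direction(24, 200)
NVLINK_BIDIRECTIONAL_GB_PER_S = 1800           # 1.8 TB/s per Blackwell GPU
NVLINK_ONE_WAY_GB_PER_S = NVLINK_BIDIRECTIONAL_GB_PER_S / 2   # assumed even split
GAUDI3_CARD_PRICE_USD = per_card_price_usd(125_000, 8)

if __name__ == "__main__":
    print(f"Gaudi 3 Ethernet: {GAUDI3_ONE_WAY_GB_PER_S:.0f} GB/s per direction")
    print(f"NVLink (per GPU): {NVLINK_ONE_WAY_GB_PER_S:.0f} GB/s per direction")
    print(f"Gaudi 3 price per card in kit: ${GAUDI3_CARD_PRICE_USD:,.0f}")
```

On these assumptions the per-accelerator gap in raw link bandwidth is about 1.5x, which is narrower than the gap in compute; the larger practical difference is in how many accelerators each fabric can join into one tightly coupled domain.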

Overall Comparison (Blackwell vs. Gaudi 3):

NVIDIA Blackwell is the undisputed leader in peak AI performance and large-scale training capabilities, especially for the most massive LLMs. Intel Gaudi 3 positions itself as a strong value proposition, offering competitive performance for certain LLM inference and training tasks at a significantly lower cost, and appealing to customers who prioritize open standards and TCO. Intel faces an uphill battle to challenge NVIDIA’s established ecosystem and performance lead in the absolute high-end.

In summary:

- NVIDIA Blackwell: Focuses on absolute performance leadership, extreme scalability via NVLink, and pushing the boundaries of AI model size and complexity with innovations like FP4. It comes at a premium price.

- AMD Instinct MI300X/MI350X: Aims for strong performance, particularly with its large memory capacity, offering a competitive alternative to NVIDIA’s H100/H200, often with a better price-performance ratio and an open software stack. It’s a solid contender for many large-scale AI tasks.

- Intel Gaudi 3: Concentrates on price-performance for specific AI workloads (especially inference and mid-scale training) and promotes an open, Ethernet-based ecosystem as a key differentiator to attract customers seeking alternatives to NVIDIA’s dominant, but more expensive, solutions.

The market for AI accelerators is rapidly evolving, and while NVIDIA currently holds a significant lead, both AMD and Intel are innovating and carving out their niches by offering competitive solutions, particularly on the cost and open-source fronts.