Nvidia Blackwell GPU Speed and Efficiency Compared to AMD and Intel

Nvidia Blackwell vs. AMD Instinct & Intel Gaudi: A Comparative Look at AI Performance and Efficiency

The AI landscape is incredibly dynamic, with Nvidia, AMD, and Intel fiercely competing to deliver the most powerful and efficient accelerators for deep learning workloads. While Nvidia’s Blackwell architecture (e.g., B200, GB200) represents the cutting edge for general-purpose AI GPUs, AMD’s Instinct MI300X and Intel’s Gaudi 3 offer compelling alternatives, each with distinct strengths in terms of speed, efficiency, and cost-effectiveness.

Direct, apples-to-apples comparisons are difficult because the three vendors differ in architectural approach, supported precisions, and ecosystem maturity. Still, their respective positions can be outlined.

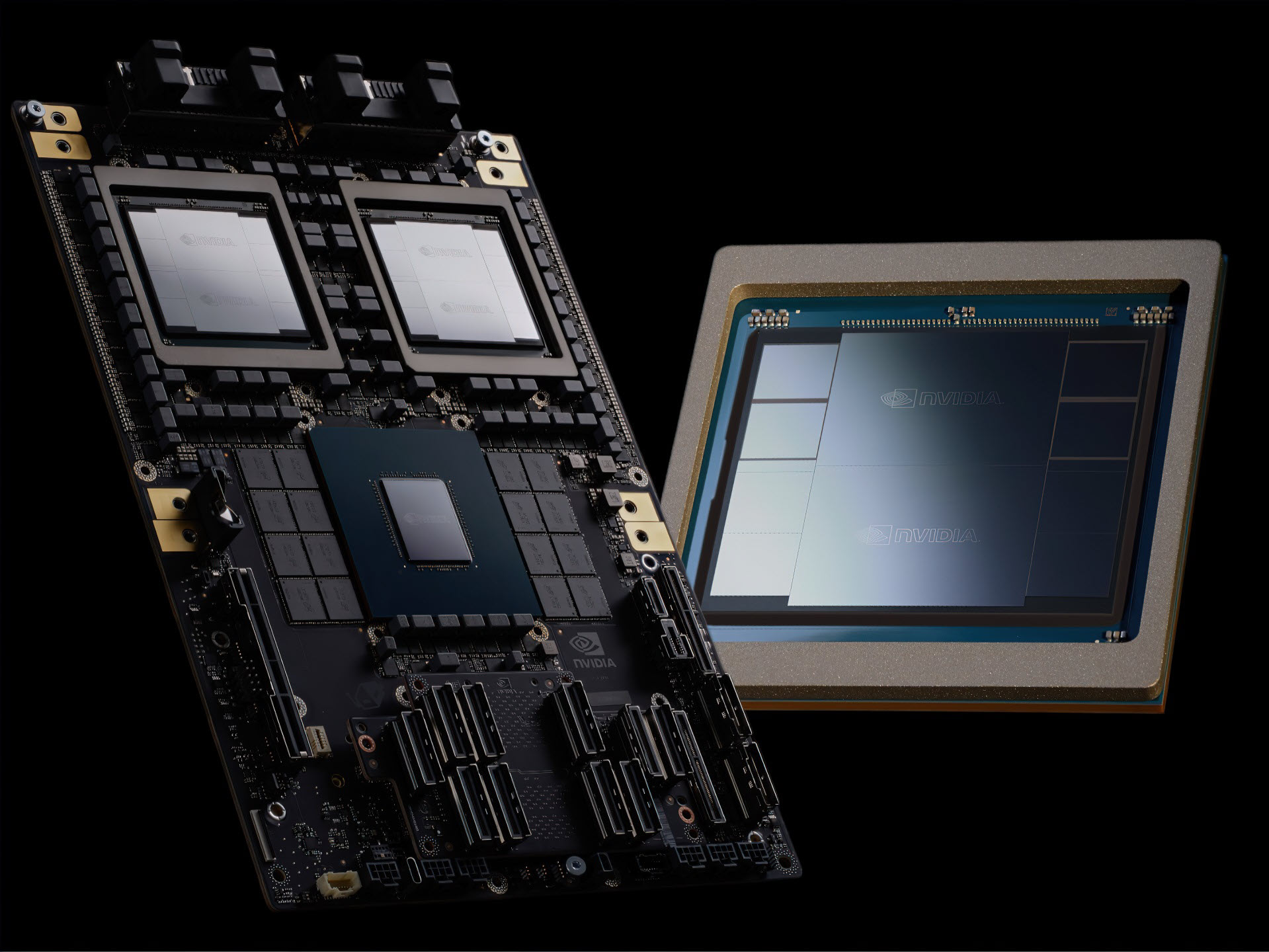

Nvidia Blackwell: The AI Performance King

Nvidia’s Blackwell architecture is designed for unprecedented raw performance and scalability on the largest and most demanding AI models, particularly generative AI and LLMs.

Speed:

Raw Throughput Leader: Blackwell’s fifth-generation Tensor Cores and second-generation Transformer Engine deliver a massive leap in FLOPS, especially with the new FP4 (4-bit floating point) precision. Nvidia claims up to 15x higher inference throughput on models like GPT-MoE-1.8T compared with its previous-generation H100.

Low-Latency Inference: For real-time applications requiring sub-100ms latency, Blackwell’s FP4 support and NVLink interconnect can reportedly make it 2-4x faster than competing accelerators.

Massive Scalability: The fifth-generation NVLink (1.8 TB/s) and NVLink Switch enable training and inference on models up to 10 trillion parameters across clusters of up to 72 GPUs (GB200 NVL72), functioning as a single logical GPU.
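The scaling figures above can be sanity-checked with simple arithmetic. The sketch below is only an illustration: it uses the 1.8 TB/s, 72-GPU, and 10-trillion-parameter figures from this section plus the 192GB B200 capacity cited later in the article, and it counts model weights only (no KV cache, activations, or optimizer state). The function names are hypothetical helpers, not part of any Nvidia tool.

```python
# Figures quoted in the article; helper names are illustrative only.
NVLINK_TB_PER_S_PER_GPU = 1.8   # fifth-generation NVLink, per GPU
GPUS_IN_NVL72 = 72              # GB200 NVL72 domain size
HBM_GB_PER_GPU = 192            # B200 capacity as cited in the article


def aggregate_nvlink_tb_per_s(gpus: int = GPUS_IN_NVL72) -> float:
    """Sum of per-GPU NVLink bandwidth across the domain."""
    return gpus * NVLINK_TB_PER_S_PER_GPU


def weight_footprint_tb(params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in terabytes (1 TB = 1e12 bytes)."""
    return params * bits_per_param / 8 / 1e12


def pooled_hbm_tb(gpus: int = GPUS_IN_NVL72) -> float:
    """Total HBM across the domain, in terabytes."""
    return gpus * HBM_GB_PER_GPU / 1000


if __name__ == "__main__":
    print(f"Aggregate NVLink: {aggregate_nvlink_tb_per_s():.1f} TB/s")
    for bits in (16, 8, 4):
        need = weight_footprint_tb(10e12, bits)
        print(f"10T params at {bits}-bit: {need:.1f} TB "
              f"of {pooled_hbm_tb():.1f} TB pooled")
```

Under these assumptions, 16-bit weights for a 10-trillion-parameter model would not fit in the pooled memory of one 72-GPU domain, while 8-bit and 4-bit weights would, which is one reason low-precision formats such as FP4 matter at this scale.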

Efficiency:

Energy per Token: While Blackwell GPUs can draw significant power (up to 1200W for B200), their immense performance means a reduction in energy consumed per generated token compared to previous generations. This translates to lower operational costs at scale for certain workloads.

System-Level Efficiency: The GB200 Grace Blackwell Superchip integrates the GPU with Nvidia’s Grace CPU, offering a highly optimized and power-efficient compute node for large-scale AI.
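The energy-per-token argument above reduces to dividing power draw by throughput. The following is a minimal sketch of that calculation; the throughput numbers and the 700W older-card figure are placeholders chosen for illustration, not measured results, and only the 1200W figure comes from this article.

```python
def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy per generated token: watts are joules per second."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return power_watts / tokens_per_second


def kwh_per_million_tokens(power_watts: float, tokens_per_second: float) -> float:
    """Same quantity expressed in kWh per one million tokens."""
    joules = joules_per_token(power_watts, tokens_per_second) * 1_000_000
    return joules / 3.6e6  # 1 kWh = 3.6 million joules


if __name__ == "__main__":
    # Placeholder throughputs: substitute numbers measured on your own workload.
    older = joules_per_token(power_watts=700, tokens_per_second=1_000)
    newer = joules_per_token(power_watts=1200, tokens_per_second=5_000)
    print(f"older card: {older:.2f} J/token")
    print(f"newer card: {newer:.2f} J/token")
```

With these made-up inputs the higher-power card still uses less energy per token, because its throughput grows faster than its power draw. Whether that holds in practice depends entirely on measured throughput for the workload in question.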

Ecosystem Advantage:

Nvidia’s CUDA software platform remains the industry standard, offering unparalleled maturity, developer tools, libraries (cuDNN, TensorRT), and a vast community. This significantly reduces development time and makes it easier to optimize performance for AI applications.

AMD Instinct MI300X: Strong Value and Memory Capacity

AMD’s Instinct MI300X, based on the CDNA 3 architecture, is a formidable competitor, focusing on high memory capacity and competitive price-performance, particularly for large models that can fit on a single card.

Speed:

Competitive Raw Compute: The MI300X offers substantial FP8 (8-bit floating point) performance, aiming to directly compete with Nvidia’s H100 and H200 generations. Some low-level benchmarks have even shown MI300X outperforming H100 in certain compute and cache metrics.

Excellent Inference for Large Models: With 192GB of HBM3 memory (matching the Blackwell B200 in capacity), the MI300X can host 70-150B parameter models entirely on a single card, depending on the precision used. This avoids the overhead of splitting the model across cards (tensor parallelism), leading to lower latency and potentially better performance for these specific use cases.
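Whether a model in the 70-150B range fits on one card depends mostly on bytes per parameter. The sketch below estimates the weight footprint against the 192GB capacity quoted above; `reserve_gb` is a hypothetical allowance for KV cache and activations, and the helper names are illustrative rather than part of ROCm or any framework.

```python
MI300X_HBM_GB = 192  # capacity quoted in the article


def weights_gb(params_billions: float, bits_per_param: int) -> float:
    """Weight footprint in GB: billions of parameters times bytes per parameter."""
    return params_billions * bits_per_param / 8


def fits_on_card(params_billions: float, bits_per_param: int,
                 hbm_gb: float = MI300X_HBM_GB,
                 reserve_gb: float = 0.0) -> bool:
    """True if the weights plus a reserved allowance fit in the card's memory."""
    return weights_gb(params_billions, bits_per_param) + reserve_gb <= hbm_gb


if __name__ == "__main__":
    for params, bits in [(70, 16), (150, 16), (150, 8)]:
        verdict = "fits" if fits_on_card(params, bits) else "does not fit"
        print(f"{params}B at {bits}-bit: "
              f"{weights_gb(params, bits):.0f} GB, {verdict}")
```

By this estimate a 70B model fits at 16-bit precision (140 GB), while a 150B model needs 8-bit weights (150 GB) to stay on a single card. Real deployments also need room for the KV cache, which grows with batch size and context length.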

Efficiency:

Lower Power Draw: The MI300X typically has a lower TDP (750W) compared to Blackwell B200 (up to 1200W), which can result in better rack density and lower power consumption for a given number of cards, leading to TCO advantages.

Cost-Effectiveness: AMD often positions the MI300X with an aggressive pricing strategy (estimated $10k-$15k per card compared to Blackwell’s $30k-$40k+), offering a compelling “bang for the buck” for organizations looking for high memory capacity at a lower capital expenditure.
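The power and price figures above can be turned into two rough comparison metrics: cards per power budget and price per GB of memory. This sketch uses only the TDP, capacity, and estimated price ranges quoted in this article; the 40 kW rack budget is an assumed placeholder, and the calculation ignores host CPUs, networking, and cooling overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    tdp_watts: int
    hbm_gb: int
    price_low_usd: int
    price_high_usd: int

    def mid_price_usd(self) -> float:
        """Midpoint of the estimated price range."""
        return (self.price_low_usd + self.price_high_usd) / 2

    def usd_per_gb(self) -> float:
        """Midpoint price divided by on-card memory capacity."""
        return self.mid_price_usd() / self.hbm_gb


def cards_per_power_budget(card: Card, budget_watts: int) -> int:
    """How many cards fit in a power budget, counting accelerator TDP only."""
    return budget_watts // card.tdp_watts


# Figures as estimated in the article, not vendor list prices.
MI300X = Card("MI300X", 750, 192, 10_000, 15_000)
B200 = Card("B200", 1200, 192, 30_000, 40_000)

if __name__ == "__main__":
    rack_budget_watts = 40_000  # assumed rack budget for illustration
    for card in (MI300X, B200):
        print(f"{card.name}: {cards_per_power_budget(card, rack_budget_watts)} "
              f"cards per rack budget, ${card.usd_per_gb():.0f} per GB")
```

These metrics say nothing about delivered performance per card, so they are best read alongside throughput measurements rather than on their own.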

Ecosystem:

AMD’s ROCm software platform is an open-source alternative to CUDA and has made significant strides in maturity and framework support (PyTorch, TensorFlow). While still catching up to CUDA’s breadth and optimization, it’s becoming a viable option, especially for those prioritizing open standards and cost.

Intel Gaudi 3: Cost-Optimized for Training and Inference

Intel’s Gaudi 3 (from Habana Labs) focuses on delivering a cost-effective solution for AI training and inference, particularly for enterprises seeking alternatives to Nvidia’s premium offerings.

Speed:

Strong H100 Competition: Intel claims Gaudi 3 can offer comparable or even better performance than Nvidia’s H100 for certain training tasks and LLM inference scenarios (e.g., up to 50% faster training than H100).

Dedicated AI Architecture: Gaudi 3’s architecture is optimized for AI workloads, featuring a large number of Tensor Processor Cores (TPCs) and high-bandwidth on-chip memory.

Memory Capacity: With 128GB of HBM2e memory and 96MB of onboard SRAM, it offers substantial memory for generative AI datasets.

Efficiency:

Price-Performance Advantage: Intel’s key differentiator is its aggressive pricing. Gaudi 3 is generally positioned as significantly more cost-effective than Nvidia’s H100 (and likely Blackwell), aiming to provide a better price-per-performance ratio, especially for inference.

Power Efficiency: Intel also emphasizes competitive power efficiency, with Gaudi 3 often having a lower TDP (around 600W) than top-tier Nvidia and AMD GPUs.

Ecosystem:

Intel’s SynapseAI software stack integrates with popular frameworks like PyTorch and TensorFlow. While it may not have the same breadth or maturity as CUDA, it provides the necessary tools for deploying and optimizing AI models on Gaudi hardware.

Key Considerations and Decision Factors:

Workload Specificity:

Nvidia Blackwell: Ideal for cutting-edge research, training the largest foundation models (trillion-parameter scale), and high-throughput, low-latency inference for real-time AI services where ultimate performance is paramount.

AMD MI300X: Strong for training and inference of large (but not necessarily the absolute largest) models, particularly when single-card memory capacity and TCO are critical. Good for organizations building on-premise AI infrastructure seeking a competitive alternative.

Intel Gaudi 3: A compelling option for cost-conscious enterprises focused on efficient training and inference of open-source and task-specific LLMs, especially where high-volume deployments prioritize value over bleeding-edge peak performance.

Software Ecosystem:

Nvidia’s CUDA remains the undisputed leader, offering the most mature and comprehensive software stack. AMD’s ROCm and Intel’s SynapseAI are improving rapidly but still require more effort for full optimization and may have fewer niche library integrations.

Total Cost of Ownership (TCO):

This includes not just the upfront hardware cost but also power consumption, cooling requirements, and the cost of engineering time for optimization. AMD and Intel often compete aggressively on TCO.
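The components listed above can be combined into a simple additive model. The sketch below is one possible formulation, not an industry-standard formula: cooling is approximated with a PUE (power usage effectiveness) multiplier on energy use, and every input value in the example is a placeholder to be replaced with your own figures.

```python
HOURS_PER_YEAR = 8760


def energy_cost_usd(avg_watts: float, years: float,
                    usd_per_kwh: float, pue: float = 1.0) -> float:
    """Electricity cost, with cooling overhead folded in via a PUE multiplier."""
    kwh = avg_watts / 1000 * HOURS_PER_YEAR * years
    return kwh * pue * usd_per_kwh


def total_cost_of_ownership(hardware_usd: float, avg_watts: float,
                            years: float, usd_per_kwh: float, pue: float,
                            engineering_hours: float,
                            usd_per_engineering_hour: float) -> float:
    """Upfront hardware + energy (incl. cooling) + engineering time."""
    energy = energy_cost_usd(avg_watts, years, usd_per_kwh, pue)
    engineering = engineering_hours * usd_per_engineering_hour
    return hardware_usd + energy + engineering


if __name__ == "__main__":
    # All values below are placeholders for illustration only.
    tco = total_cost_of_ownership(
        hardware_usd=12_500, avg_watts=750, years=3,
        usd_per_kwh=0.10, pue=1.4,
        engineering_hours=200, usd_per_engineering_hour=100,
    )
    print(f"Estimated 3-year TCO per card: ${tco:,.0f}")
```

A model like this makes the trade-off explicit: a cheaper card can lose its advantage if porting and tuning for a less mature software stack consumes enough engineering time.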

Availability and Supply Chain:

Nvidia currently holds the lion’s share of the AI chip market, but AMD and Intel are actively ramping up production and securing partnerships.

In summary, while Nvidia Blackwell sets the benchmark for raw AI performance and scalability, AMD’s MI300X and Intel’s Gaudi 3 provide robust and often more cost-effective alternatives, pushing the envelope in areas like memory capacity and price-performance. The choice between them often depends on the specific AI workload, budget constraints, and an organization’s existing software ecosystem.