NVIDIA’s Blackwell GPU architecture represents a significant leap forward compared to its predecessors, Hopper (H100/H200) and Ampere (A100), particularly in the realm of AI and high-performance computing (HPC). Here’s a breakdown of the key improvements and features:

1. Architecture and Transistor Count:

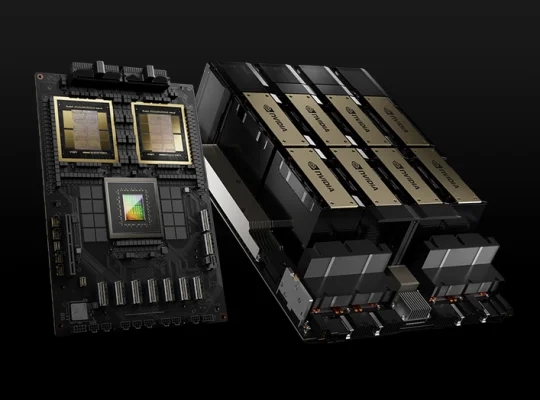

Blackwell (B200/B100): A dual-die design, essentially combining two large dies into a single package, acting as one unified CUDA GPU. The B200 boasts 208 billion transistors (104 billion per die), a substantial increase over previous generations. It leverages TSMC’s 4NP process, an enhancement of the 4N node.

Hopper (H100): Features a monolithic die with 80 billion transistors, built on TSMC’s 4N process.

Ampere (A100): Built on TSMC’s 7nm process, with 54.2 billion transistors, fewer than either Hopper or Blackwell.

2. Performance Uplift, Especially for AI:

AI Training & Inference: Blackwell is designed specifically for the generative AI era, delivering massive performance gains.

Inference: For large language models (LLMs), Blackwell can achieve up to 15x the inference performance of H100, largely due to the introduction of FP4 precision and its ability to hold larger models in a smaller memory footprint. In raw throughput, a single B200 can reach up to 9 PFLOPS in FP8 (a peak figure that assumes sparsity), compared to ~4 PFLOPS on H100 on the same basis.

Training: Blackwell offers ~3-4x training speedups in many cases. In MLPerf Training benchmarks, Blackwell has shown 2x the performance for GPT-3 pre-training and 2.2x for Llama 2 70B fine-tuning on a per-GPU basis compared to Hopper.

Memory Bandwidth and Capacity:

Blackwell: Features 192 GB of HBM3e memory, 2.4x the capacity of the H100 (80 GB). Its aggregate memory bandwidth rises to 8 TB/s, which is ~2.4x the H100’s bandwidth (3.35 TB/s) and about 67% more than the H200 (~4.8 TB/s). This is crucial for memory-bound ML workloads and for fitting larger models on a single GPU.

Hopper (H100/H200): H100 offers 80 GB of HBM3, while the H200 improves on this with 141 GB of HBM3e and higher bandwidth.

Ampere (A100): Offered with 40 GB of HBM2 or 80 GB of HBM2e memory.
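To make the "memory-bound" point concrete, here is a rough Python sketch of a common back-of-the-envelope bound: during batch-1 LLM decoding, every weight is read roughly once per generated token, so peak bandwidth divided by the weight size caps the token rate. The 70-billion-parameter model at one byte per parameter is a hypothetical example, the 3.35 TB/s value is the H100 SXM figure assumed here, and the bound ignores KV-cache traffic, compute, and batching.

```python
# Peak memory bandwidth in bytes/s. The B200 and H200 values are the ones
# quoted above; 3.35 TB/s is the H100 SXM figure assumed for this sketch.
BANDWIDTH_BYTES_PER_S = {
    "H100": 3.35e12,
    "H200": 4.8e12,
    "B200": 8.0e12,
}


def decode_tokens_per_second_bound(num_params, bytes_per_param, bandwidth):
    """Upper bound on batch-1 decode rate if every weight is read once per token."""
    weight_bytes = num_params * bytes_per_param
    return bandwidth / weight_bytes


def bandwidth_ratio(gpu, baseline):
    """How many times more peak bandwidth `gpu` has than `baseline`."""
    return BANDWIDTH_BYTES_PER_S[gpu] / BANDWIDTH_BYTES_PER_S[baseline]


if __name__ == "__main__":
    # Hypothetical 70B-parameter model stored at 1 byte per parameter (FP8).
    for name, bandwidth in BANDWIDTH_BYTES_PER_S.items():
        bound = decode_tokens_per_second_bound(70e9, 1.0, bandwidth)
        ratio = bandwidth_ratio(name, "H100")
        print(f"{name}: at most {bound:.0f} tokens/s ({ratio:.2f}x H100 bandwidth)")
```

Under these assumptions the bound scales linearly with bandwidth, which is why the bandwidth ratio between generations, rather than the FLOPS ratio, tends to govern single-stream decoding speed.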

3. New Features and Architectural Enhancements:

Second-Generation Transformer Engine: Blackwell’s Transformer Engine is significantly enhanced, adding support for the MXFP4 and MXFP6 micro-scaling formats. This allows for double the effective throughput and model size for inference while maintaining accuracy, crucial for trillion-parameter models.
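The idea behind micro-scaling can be sketched in a few lines of Python. In the MX formats, a block of 32 elements shares one power-of-two scale, and each element is stored as a 4-bit E2M1 value. The code below is a simplified illustration, not the Transformer Engine's actual implementation: the function names are hypothetical, ties are broken toward the smaller magnitude rather than by round-to-nearest-even, and special values are ignored.

```python
import math

# Magnitudes representable by an FP4 E2M1 element (1 sign, 2 exponent, 1 mantissa bit).
E2M1_VALUES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
E2M1_MAX_EXPONENT = 2  # the largest power of two in E2M1 is 2**2 = 4
BLOCK_SIZE = 32        # elements that share one scale
SCALE_BITS = 8         # the shared scale is stored as an 8-bit exponent


def quantize_block(block):
    """Return (shared_exponent, quantized_elements) for one block of floats."""
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return 0, [0.0] * len(block)
    # Power-of-two scale that places the largest element in E2M1's top binade.
    shared_exp = math.floor(math.log2(max_abs)) - E2M1_MAX_EXPONENT
    scale = 2.0 ** shared_exp
    quantized = []
    for x in block:
        magnitude = min(abs(x) / scale, E2M1_VALUES[-1])  # saturate at 6.0
        nearest = min(E2M1_VALUES, key=lambda v: abs(v - magnitude))
        quantized.append(math.copysign(nearest, x))
    return shared_exp, quantized


def dequantize_block(shared_exp, quantized):
    """Recover approximate floats from a quantized block."""
    scale = 2.0 ** shared_exp
    return [q * scale for q in quantized]


def quantize(values):
    """Split a flat list into blocks of BLOCK_SIZE and quantize each one."""
    return [quantize_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


def bits_per_element():
    """Average storage cost: 4 bits per element plus the amortized shared scale."""
    return 4 + SCALE_BITS / BLOCK_SIZE
```

Because the scale is shared across only 32 elements, an outlier distorts just its own block, and the amortized cost stays at 4.25 bits per element.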

Fifth-Generation NVLink and NVLink Switch:

Blackwell: Doubles the GPU interconnect speed to 1.8 TB/s with NVLink-5. The NVLink Switch allows for scaling to much larger GPU clusters (up to 576 GPUs) with significantly reduced communication overhead and improved efficiency in multi-GPU training.

Hopper: Introduced NVLink Network for GPU-to-GPU communication among up to 256 GPUs with 900 GB/s bidirectional throughput.

Ampere: Featured third-gen NVLink with 600 GB/s aggregate bandwidth.
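As a rough illustration of what the NVLink figures above mean for multi-GPU training, the Python sketch below estimates a bandwidth-only lower bound for a ring all-reduce of gradients. It assumes the quoted per-GPU numbers are bidirectional totals, so only half is available in the send direction, and it ignores latency, protocol overhead, and switch topology. The 10 GB payload and the 8-GPU group are hypothetical.

```python
# Per-GPU NVLink bandwidth in bytes/s, as quoted above (bidirectional totals).
NVLINK_BIDIRECTIONAL = {
    "Ampere (NVLink 3)": 600e9,
    "Hopper (NVLink 4)": 900e9,
    "Blackwell (NVLink 5)": 1.8e12,
}


def ring_allreduce_seconds(payload_bytes, num_gpus, bidirectional_bw):
    """Bandwidth-only lower bound for a ring all-reduce.

    Each GPU sends 2 * (N - 1) / N of the payload, and only half of the
    bidirectional figure is assumed usable in the send direction.
    """
    send_bw = bidirectional_bw / 2
    bytes_sent = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return bytes_sent / send_bw


if __name__ == "__main__":
    payload = 10e9  # hypothetical 10 GB of gradients
    for name, bw in NVLINK_BIDIRECTIONAL.items():
        milliseconds = ring_allreduce_seconds(payload, 8, bw) * 1e3
        print(f"{name}: {milliseconds:.1f} ms")
```

Since the bound is inversely proportional to link bandwidth, doubling NVLink speed halves the communication floor for a fixed payload; real speedups depend on how much of each training step is communication-bound.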

Multi-Die GPU Design (NV-High Bandwidth Interface – NV-HBI): Blackwell’s hallmark feature is its chiplet design, where two dies are connected with a 10 TB/s link (NV-HBI), allowing them to act as a single, coherent GPU. This sidesteps the reticle limit on the size of a single die and allows more transistors per package.

Dedicated Decompression Engine: Blackwell includes a new Decompression Engine that decompresses data at up to 800 GB/s, making it significantly faster (6x faster than H100) for database operations and data analytics.

Confidential Computing and Secure AI: Blackwell builds on the GPU confidential computing that Hopper introduced, adding Trusted Execution Environment I/O (TEE-I/O) support. This provides enhanced security for sensitive AI workloads and protects intellectual property.

FP4 Support: A major advancement for inference, FP4 (4-bit floating point) significantly reduces memory requirements and can double performance compared to FP8, allowing for even larger models to be deployed more economically.
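The memory saving is simple arithmetic, sketched below in Python. The 175-billion-parameter model is a hypothetical example, and the estimate covers weights only: activations, KV cache, and any per-block scale factors are left out.

```python
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}
B200_MEMORY_GB = 192  # capacity quoted earlier in this article


def weight_footprint_gb(num_params, fmt):
    """Weights-only footprint in GB (1 GB = 1e9 bytes)."""
    return num_params * BITS_PER_PARAM[fmt] / 8 / 1e9


def fits_on_one_gpu(num_params, fmt, capacity_gb=B200_MEMORY_GB):
    """True if the weights alone fit in the given memory capacity."""
    return weight_footprint_gb(num_params, fmt) <= capacity_gb


if __name__ == "__main__":
    params = 175e9  # hypothetical 175B-parameter model
    for fmt in BITS_PER_PARAM:
        size = weight_footprint_gb(params, fmt)
        verdict = "fits" if fits_on_one_gpu(params, fmt) else "does not fit"
        print(f"{fmt}: {size:.1f} GB, {verdict} in {B200_MEMORY_GB} GB")
```

For this example the weights take 350 GB in FP16, 175 GB in FP8, and 87.5 GB in FP4, so halving the precision is what leaves room on a single GPU for the KV cache and activations.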

Increased TGP: To achieve its massive performance, the B200 has a higher Total Graphics Power (TGP) of 1000W, compared to 700W for the H100. Despite this, performance per watt for large deployments has improved significantly.
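A quick Python check shows how a higher power budget can still mean better efficiency. It uses only the peak FP8 and power figures quoted in this article, so it reflects peak per-GPU numbers rather than measured deployment efficiency, and it leaves out cooling and networking power.

```python
# Peak FP8 throughput (PFLOPS) and power (W) as quoted in this article.
GPUS = {
    "H100": {"fp8_pflops": 4.0, "watts": 700},
    "B200": {"fp8_pflops": 9.0, "watts": 1000},
}


def tflops_per_watt(name):
    """Peak FP8 TFLOPS delivered per watt."""
    gpu = GPUS[name]
    return gpu["fp8_pflops"] * 1000 / gpu["watts"]


def efficiency_gain(new, old):
    """Ratio of peak FP8 performance per watt between two GPUs."""
    return tflops_per_watt(new) / tflops_per_watt(old)


if __name__ == "__main__":
    for name in GPUS:
        print(f"{name}: {tflops_per_watt(name):.2f} TFLOPS/W")
    print(f"B200 vs H100: {efficiency_gain('B200', 'H100'):.2f}x")
```

On these figures, power rises by about 1.43x while peak FP8 throughput rises by 2.25x, for roughly a 1.58x gain in peak FP8 performance per watt; moving a workload from FP8 to FP4 would widen that gap further.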

In essence, Blackwell represents NVIDIA’s aggressive push to meet the escalating demands of generative AI, offering unprecedented performance, memory capabilities, and scalability through a combination of innovative architectural design, new data types, and enhanced interconnectivity.