NVIDIA plays a unique role in the cloud computing landscape, primarily as a provider of the specialized hardware (GPUs) and software platforms that power advanced workloads, especially in AI, machine learning, and high-performance computing (HPC). While AWS, Google Cloud, and Azure are comprehensive cloud service providers offering a vast array of services, NVIDIA’s “cloud computing” offerings are more focused on enabling these computationally intensive tasks within or across those hyperscale clouds, and also through specialized partners.

Here’s a breakdown of NVIDIA’s approach versus the major cloud providers:

NVIDIA’s Cloud Computing Focus:

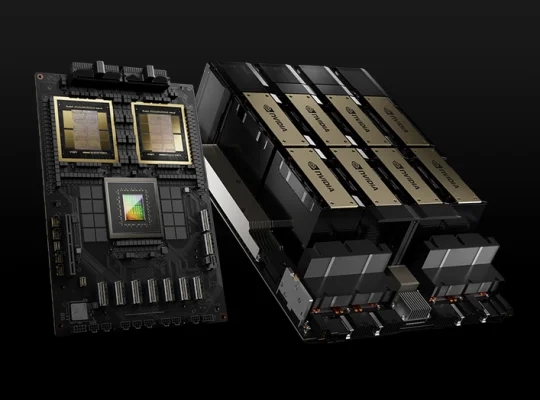

GPU Hardware and Ecosystem: NVIDIA is the dominant supplier of data-center GPUs (e.g., A100, H100, L40S, T4), which are widely used for AI model training, inference, and complex simulations. Its core cloud offering revolves around making these GPUs accessible and optimized.

DGX Cloud: This is NVIDIA’s fully managed AI training platform. Rather than building its own massive data centers to compete directly with AWS, Azure, and Google Cloud, NVIDIA partners with these hyperscalers and with other providers such as Oracle Cloud Infrastructure to host DGX Cloud instances. These instances come pre-configured with NVIDIA hardware (DGX systems with multiple GPUs), the NVIDIA software stack (NVIDIA AI Enterprise, Base Command), and optimized libraries, aiming to provide a “day-one productivity” experience for large-scale AI development.

Software and Platforms:

- NVIDIA AI Enterprise: A comprehensive software suite for AI development and deployment, optimized for NVIDIA GPUs. This can be deployed on various cloud platforms.

- NVIDIA Omniverse Cloud: A suite of services for building and operating industrial metaverse applications, digital twins, and simulations. It leverages NVIDIA’s OVX and HGX platforms and the Graphics Delivery Network (GDN) to provide high-performance, low-latency collaboration and simulation capabilities in the cloud. Omniverse Cloud APIs also enable integration with existing design and automation software.

- CUDA, cuDNN, TensorRT, etc.: These are foundational NVIDIA software tools and libraries that developers use to accelerate AI and HPC workloads on GPUs, regardless of where those GPUs are hosted.
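Because the NVIDIA driver tooling looks the same on any host, a script can discover the available GPUs the same way on a hyperscaler instance, a neocloud machine, or a local workstation. The following is a minimal sketch, assuming the `nvidia-smi` command-line tool that ships with the NVIDIA driver is on the `PATH`; the helper names `parse_gpu_csv` and `list_nvidia_gpus` are hypothetical and chosen only for illustration.

```python
import shutil
import subprocess


def parse_gpu_csv(text):
    """Parse `name, memory.total` CSV rows (no header) into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        # Split on the last comma so a GPU name is kept whole.
        name, _, memory = line.rpartition(",")
        gpus.append({"name": name.strip(), "memory": memory.strip()})
    return gpus


def list_nvidia_gpus():
    """Return the GPUs visible on this machine, or [] if none can be queried."""
    if shutil.which("nvidia-smi") is None:
        return []  # NVIDIA driver tools are not installed on this host
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=False,
            timeout=10,
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for gpu in list_nvidia_gpus():
        print(gpu["name"], gpu["memory"])
```

The parsing step is kept separate from the subprocess call so it can be checked on a machine without a GPU.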

Specialized Cloud Partners (“Neoclouds”): NVIDIA also partners with, and in some cases has invested in, specialized GPU cloud providers like CoreWeave, Lambda Labs, and Paperspace. These “neoclouds” focus primarily on offering GPU infrastructure and often provide competitive pricing and availability for high-demand GPU instances.

Focus on AI/ML and HPC: NVIDIA’s cloud strategy is heavily geared towards accelerating AI, machine learning, deep learning, data analytics, and high-performance computing workloads that demand significant parallel processing power.

AWS, Google Cloud, and Azure (Hyperscale Cloud Providers):

These are comprehensive cloud platforms offering a vast array of services beyond just GPUs:

Broad Service Portfolios: They provide compute (CPUs, GPUs, TPUs), storage (object, block, file), networking, databases (relational, NoSQL), analytics, IoT, security, developer tools, and much more.

GPU Instance Offerings: All three major cloud providers offer a wide range of GPU-accelerated virtual machine instances powered by NVIDIA GPUs (A100, H100, V100, T4, etc.). They compete on instance types, pricing, availability, and interconnects for multi-GPU setups (such as NVLink and NVSwitch within a node, and InfiniBand or a provider-specific fabric between nodes).

- AWS: Offers various EC2 instances (P-series, G-series) with NVIDIA GPUs. Known for its broad range and global infrastructure. Has services like SageMaker for managed ML workflows.

- Microsoft Azure: Provides N-Series Virtual Machines with NVIDIA GPUs. Strong integration with Microsoft enterprise tools and a focus on hybrid cloud strategies. Azure OpenAI Service provides API access to OpenAI’s GPT models.

- Google Cloud (GCP): Offers NVIDIA GPUs (for example, A2 instances with A100s) alongside its own custom-designed Tensor Processing Units (TPUs), which are optimized for deep learning workloads in frameworks such as TensorFlow and JAX. Strong in data analytics and MLOps with Vertex AI.

General Purpose vs. Specialized: While they offer excellent GPU capabilities, their overall business model is to be a general-purpose cloud platform that can host almost any application, from web servers to large-scale data warehouses.

Control and Customization: These platforms offer a high degree of control over the infrastructure, allowing users to build highly customized solutions. However, this often requires more hands-on DevOps and infrastructure management.

Key Differences and Overlap:

NVIDIA as an Enabler: NVIDIA primarily provides the underlying technology (GPUs, software stack) that the hyperscale cloud providers integrate into their broader offerings. NVIDIA’s DGX Cloud is a direct offering, but it often runs on the infrastructure of these major clouds.

Focus vs. Breadth: NVIDIA’s cloud focus is specialized on AI/ML and advanced simulation, providing highly optimized environments. AWS, Google Cloud, and Azure offer a much broader set of services for all types of workloads.

Managed Experience: NVIDIA’s DGX Cloud aims to offer a highly managed and optimized experience for specific AI workloads, reducing the complexity of setting up and managing GPU infrastructure. The hyperscale clouds offer managed services for many areas, but often require more integration effort for complex multi-GPU setups.

Hardware Ownership vs. Rental: NVIDIA designs and sells the hardware; the cloud providers rent out instances of that hardware, often alongside their own custom chips (such as Google’s TPUs, AWS’s Trainium and Inferentia, or Azure’s Maia accelerators).

Omniverse as a Vertical Solution: Omniverse Cloud is a distinct offering that targets specific industries for digital twin and simulation use cases, often leveraging the underlying cloud GPU infrastructure from various providers.

In essence, if you need raw GPU power and an optimized NVIDIA software stack for AI/ML or HPC, you might consider NVIDIA’s DGX Cloud directly or a specialized “neocloud” provider. However, if you need a comprehensive cloud environment with a wide range of services, robust networking, storage, databases, and more, then AWS, Google Cloud, or Azure are the primary choices, and they will offer NVIDIA GPUs as part of their compute instances.
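The rule of thumb in this summary can be sketched as a small helper. This is only an illustration of the reasoning above, not a recommendation engine: the `Workload` fields and the `suggest_platform` function are hypothetical names, and the sketch ignores pricing, availability, and compliance.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    # True if the project also needs databases, storage tiers, networking, etc.
    needs_broad_services: bool
    # True if the team wants a pre-configured, managed NVIDIA software stack.
    wants_managed_ai_stack: bool


def suggest_platform(workload):
    """Map a workload profile to the category of provider the summary points to."""
    if workload.needs_broad_services:
        return "hyperscaler (AWS, Google Cloud, or Azure) with NVIDIA GPU instances"
    if workload.wants_managed_ai_stack:
        return "NVIDIA DGX Cloud"
    return "specialized GPU cloud (neocloud)"


if __name__ == "__main__":
    training_only = Workload(needs_broad_services=False, wants_managed_ai_stack=True)
    print(suggest_platform(training_only))
```

Checking for broad service needs first mirrors the text: a comprehensive environment points to a hyperscaler, which still offers NVIDIA GPUs as part of its compute instances.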