Top AI Supercomputers Powered by NVIDIA’s Latest Technologies

NVIDIA stands at the forefront of the AI revolution, consistently pushing the boundaries of what’s possible with their cutting-edge GPU architectures and integrated supercomputing platforms. Their latest innovations are powering the next generation of AI research, development, and deployment across diverse industries.

Here’s a look at some of the top AI supercomputers and technologies leveraging NVIDIA’s newest advancements:

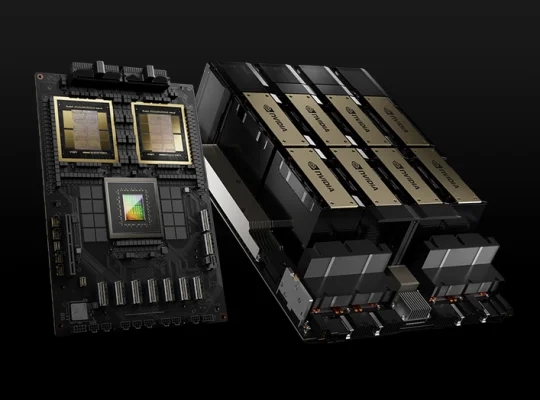

1. NVIDIA Grace Blackwell Superchips (GB200/GB300)

The Grace Blackwell superchip (GB200) is the building block of NVIDIA’s current AI supercomputing platforms. It combines two Blackwell B200 GPUs with one Grace CPU, connected through the NVLink chip-to-chip interconnect so that CPU and GPUs share a coherent memory space. Key highlights include:

Unprecedented Performance: NVIDIA claims up to 30 times faster real-time LLM inference for the GB200 NVL72 rack compared with the same number of H100 GPUs, along with significant gains in power efficiency.

Massive Scalability: Fifth-generation NVLink is specified to scale up to 576 GPUs within a single NVLink domain, while the rack-scale NVL72 systems connect 72 GPUs in one domain. This enables massive parallel processing for demanding AI workloads, particularly Large Language Models (LLMs).

Liquid Cooling: The rack-scale systems are liquid-cooled, which allows denser packing of GPUs and better power efficiency than air cooling.

Mass Production: NVIDIA has said it is producing these systems at a rate of about 1,000 per week to address the surging global demand for AI infrastructure.

GB300 NVL72: This is a rack-scale system that links 72 Blackwell Ultra GPUs and 36 Grace CPUs in a single liquid-cooled rack, aimed at dense FP4 inference and FP8 training. CoreWeave has already deployed these systems for its cloud AI services.
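The relationship between superchips, GPUs, and CPUs in a rack is simple arithmetic, and a short sketch may make it easier to follow. The Python below assumes that every superchip in an NVL72-style rack uses the same pairing described above for the GB200 (two GPUs plus one Grace CPU); the names `RackComposition` and `rack_composition` are hypothetical helpers for illustration, not part of any NVIDIA tool.

```python
from dataclasses import dataclass

# From the text: each Grace Blackwell superchip pairs two GPUs with one Grace CPU.
GPUS_PER_SUPERCHIP = 2
CPUS_PER_SUPERCHIP = 1


@dataclass(frozen=True)
class RackComposition:
    """Hypothetical container for the parts that make up one rack."""
    gpus: int
    superchips: int
    cpus: int


def rack_composition(gpus_per_rack: int) -> RackComposition:
    """Derive superchip and CPU counts from a rack's GPU count."""
    superchips, remainder = divmod(gpus_per_rack, GPUS_PER_SUPERCHIP)
    if remainder:
        raise ValueError("GPU count must be a multiple of GPUs per superchip")
    return RackComposition(
        gpus=gpus_per_rack,
        superchips=superchips,
        cpus=superchips * CPUS_PER_SUPERCHIP,
    )


# The "72" in NVL72 is the number of GPUs in the rack.
nvl72 = rack_composition(72)
print(nvl72)
```

Under these assumptions a 72-GPU rack works out to 36 superchips and 36 Grace CPUs.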

2. NVIDIA DGX Systems: AI Supercomputing for Every Scale

NVIDIA’s DGX platform remains a cornerstone for enterprise AI development and deployment, now featuring the latest Blackwell architecture. These systems bring supercomputing power directly to researchers and organizations.

NVIDIA DGX SuperPOD: These are full-scale AI supercomputers built using interconnected DGX systems. An example is Gefion, Denmark’s first AI supercomputer, powered by 1,528 NVIDIA H100 Tensor Core GPUs and interconnected using NVIDIA Quantum-2 InfiniBand networking. Gefion is accelerating research in areas like quantum computing, drug discovery, and green energy.

NVIDIA DGX Spark (formerly Project Digits): This compact, desktop-friendly AI supercomputer is designed for researchers, data scientists, and students. Powered by the GB10 Grace Blackwell Superchip, it offers up to a petaFLOP of AI performance at FP4 precision. It’s portable enough to fit in a bag and aims to bring AI supercomputing capabilities to millions of developers.

NVIDIA DGX Station: A more powerful sibling to the Spark, the DGX Station is a workstation-sized supercomputer for professional use. Built around the GB300 Grace Blackwell Ultra Desktop Superchip, it offers up to 784 GB of coherent memory and up to 800 Gb/s of networking through a ConnectX-8 SuperNIC, and is designed for large-scale AI training and inference workloads at the desk.

3. Project Ceiba (AWS and NVIDIA Collaboration)

A groundbreaking collaboration between AWS and NVIDIA, Project Ceiba aims to build one of the world’s fastest AI supercomputers in the cloud.

Immense Scale: Project Ceiba is planned to scale to 20,736 Blackwell GPUs connected to 10,368 NVIDIA Grace CPUs. Because each GB200 Grace Blackwell Superchip pairs two GPUs with one CPU, that corresponds to 10,368 superchips. The configuration is projected to deliver 414 exaflops of AI performance. This figure refers to low-precision AI throughput, so it is not directly comparable to the FP64 benchmark results used to rank traditional supercomputers.

Cloud-Native AI: Hosted exclusively on AWS, Project Ceiba will leverage NVIDIA’s DGX Cloud architecture, providing a scalable AI platform for developers.

Advanced Networking & Cooling: AWS describes it as the first system to use fourth-generation AWS Elastic Fabric Adapter (EFA) networking, which targets low latency and high bandwidth between nodes. It also uses liquid cooling at data center scale for better efficiency.
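The scale figures quoted for Project Ceiba can be sanity-checked with a few lines of arithmetic. The sketch below is illustrative only and rests on two assumptions that are not stated in this article: that each GPU is counted at a peak of 20 petaflops of low-precision (FP4, sparse) AI throughput, and that the system is assembled from 72-GPU racks. The function name `cluster_summary` is a hypothetical helper.

```python
# From the text: two Blackwell GPUs and one Grace CPU per GB200 superchip.
GPUS_PER_SUPERCHIP = 2

# Assumption (not from the article): 72 GPUs per NVL72-style rack.
GPUS_PER_RACK_ASSUMED = 72

# Assumption (not from the article): peak low-precision AI petaflops per GPU.
PFLOPS_PER_GPU_ASSUMED = 20.0


def cluster_summary(total_gpus: int) -> dict:
    """Derive superchip, CPU, rack, and peak AI exaflop counts from a GPU count."""
    superchips, remainder = divmod(total_gpus, GPUS_PER_SUPERCHIP)
    if remainder:
        raise ValueError("GPU count must be a multiple of GPUs per superchip")
    return {
        "gpus": total_gpus,
        "superchips": superchips,
        "grace_cpus": superchips,  # one Grace CPU per superchip
        "racks": total_gpus / GPUS_PER_RACK_ASSUMED,
        "ai_exaflops": total_gpus * PFLOPS_PER_GPU_ASSUMED / 1000.0,
    }


ceiba = cluster_summary(20_736)
for name, value in ceiba.items():
    print(f"{name}: {value}")
```

With these inputs the sketch gives 10,368 superchips, 10,368 Grace CPUs, 288 racks, and roughly 414.7 exaflops, which lines up with the 414 exaflops quoted for the project. The agreement only shows that the headline number is consistent with a peak low-precision rating per GPU; it says nothing about sustained performance on real workloads.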

4. NVIDIA HGX Platform

The HGX platform continues to be a core component of NVIDIA’s data center offerings, specifically designed for AI and High-Performance Computing (HPC). HGX is the GPU baseboard that server manufacturers build their own systems around: it combines multiple NVIDIA GPUs with NVLink and NVIDIA networking technologies to deliver high performance for the most demanding AI workloads.

Beyond the Hardware: NVIDIA’s AI Software Ecosystem

NVIDIA’s dominance in AI supercomputing isn’t just about powerful hardware. It also rests on a comprehensive software ecosystem, including:

CUDA: The foundational platform for massively parallel programming on GPUs.

NVIDIA AI software stack: Including frameworks like NVIDIA Isaac for robotics, NVIDIA Metropolis for vision AI, and NVIDIA Holoscan for sensor processing.

NVIDIA Omniverse Replicator: For synthetic data generation.

NVIDIA TAO Toolkit: For fine-tuning pretrained AI models.

This robust software stack enables developers and researchers to fully harness the immense power of NVIDIA’s supercomputing platforms, accelerating advancements in large language models, generative AI, scientific research, autonomous systems, and more.

NVIDIA’s continuous innovation in both hardware and software is driving the future of AI, making increasingly complex and powerful AI models a reality.